Changelog


2024.4.2¶

Highlights¶

Trivial Merge Implementation¶

The Query Optimizer will inspect queries to determine whether a merge(...) or groupby(...).apply(...) requires a shuffle. A shuffle can be avoided if the DataFrame was already shuffled on the same columns in a previous step and no operation in between changed the partitioning layout or the relevant values in each partition.

>>> result = df.merge(df2, on="a")

>>> result = result.merge(df3, on="a")

The Query Optimizer will identify that result was already shuffled on "a" and will therefore shuffle only df3 in the second merge operation before doing a blockwise merge.
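As a rough illustration of why the second shuffle can be skipped, the sketch below hash-partitions plain Python rows on a key and then merges partition by partition. It is a toy model and not Dask's implementation: `shuffle`, `blockwise_merge`, and the two-partition layout are names and assumptions made up for this example.

```python
from collections import defaultdict

NPARTITIONS = 2  # assumed partition count for this toy example


def shuffle(rows, key, npartitions=NPARTITIONS):
    """Hash-partition rows (dicts) so equal keys land in the same partition."""
    parts = [[] for _ in range(npartitions)]
    for row in rows:
        parts[hash(row[key]) % npartitions].append(row)
    return parts


def blockwise_merge(left_parts, right_parts, key):
    """Inner-join partition i of the left side with partition i of the right side only."""
    out = []
    for left, right in zip(left_parts, right_parts):
        index = defaultdict(list)
        for row in right:
            index[row[key]].append(row)
        out.append([{**l, **r} for l in left for r in index[l[key]]])
    return out


df = [{"a": i, "x": i * 10} for i in range(6)]
df2 = [{"a": i, "y": i * 100} for i in range(6)]
df3 = [{"a": i, "z": -i} for i in range(6)]

# First merge: both inputs are shuffled on "a".
result = blockwise_merge(shuffle(df, "a"), shuffle(df2, "a"), "a")

# Second merge: `result` is already partitioned on "a", so only df3 is shuffled.
result = blockwise_merge(result, shuffle(df3, "a"), "a")

merged = sorted((row for part in result for row in part), key=lambda r: r["a"])
```

Because the output of the first merge keeps the hash layout of its inputs, matching keys from df3 are guaranteed to land in the same partition index, which is what makes the second merge blockwise.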

Auto-partitioning in read_parquet¶

The Query Optimizer will automatically repartition datasets read from Parquet files if individual partitions are too small. This reduces the number of partitions and, consequently, the size of the task graph.

The Optimizer aims to produce partitions of at least 75 MB and will combine multiple files if necessary to reach this threshold. The value can be configured with:

>>> dask.config.set({"dataframe.parquet.minimum-partition-size": 100_000_000})

The value is given in bytes. The default threshold is relatively conservative to avoid memory issues on worker nodes with a relatively small amount of memory per thread.
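To make the effect of the threshold concrete, here is a minimal sketch of greedily combining consecutive files until a minimum partition size is reached. The function `combine_files`, the greedy strategy, and the byte value used for 75 MB are assumptions for illustration; the optimizer's actual grouping logic may differ.

```python
def combine_files(file_sizes, minimum_partition_size=75_000_000):
    """Greedily group consecutive files until each group reaches the minimum size."""
    partitions, current, current_size = [], [], 0
    for index, size in enumerate(file_sizes):
        current.append(index)
        current_size += size
        if current_size >= minimum_partition_size:
            partitions.append(current)
            current, current_size = [], 0
    if current:  # leftover files form a final, possibly smaller, partition
        partitions.append(current)
    return partitions


# Five hypothetical Parquet files, sizes in bytes.
sizes = [30_000_000, 30_000_000, 30_000_000, 80_000_000, 10_000_000]
groups = combine_files(sizes)
larger_groups = combine_files(sizes, minimum_partition_size=100_000_000)
```

With the assumed default, the first three files are combined into one partition; raising the threshold to 100_000_000 bytes pulls the fourth file into that partition as well.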

Additional changes

Add GitHub Releases automation (GH#11057) Jacob Tomlinson

Add changelog entries for new release (GH#11058) Patrick Hoefler

Reinstate try/except block in _bind_property (GH#11049) Lawrence Mitchell

Fix link for query planning docs (GH#11054) Patrick Hoefler

Add config parameter for parquet file size (GH#11052) Patrick Hoefler

Add docs for query optimizer (GH#11043) Patrick Hoefler

Assignment of np.ma.masked to object-type Array (GH#9627) David Hassell

Don’t error if dask_expr is not installed (GH#11048) Simon Høxbro Hansen

Adjust test_set_index for “cudf” backend (GH#11029) Richard (Rick) Zamora

Use to/from_legacy_dataframe instead of to/from_dask_dataframe (GH#11025) Richard (Rick) Zamora

Tokenize bag groupby keys (GH#10734) Charles Stern

Add lazy “cudf” registration for p2p-related dispatch functions (GH#11040) Richard (Rick) Zamora

Collect memray profiles on exception (GH#8625) Florian Jetter

Ensure inproc properly emulates serialization protocol (GH#8622) Florian Jetter

Relax test stats profiling2 (GH#8621) Florian Jetter

Restart workers when worker-ttl expires (GH#8538) crusaderky

Use monotonic for deadline test (GH#8620) Florian Jetter

Fix race condition for published futures with annotations (GH#8577) Florian Jetter

Scatter by worker instead of worker->nthreads (GH#8590) Miles

Send log-event if worker is restarted because of memory pressure (GH#8617) Patrick Hoefler

Do not print xfailed tests in CI (GH#8619) Florian Jetter

ensure workers are not downscaled when participating in p2p (GH#8610) Florian Jetter

Run against stable fsspec (GH#8615) Florian Jetter

2024.4.1¶

This is a minor bugfix release that fixes an error when importing dask.dataframe with Python 3.11.9.

See GH#11035 and GH#11039 from Richard (Rick) Zamora for details.

Additional changes

Remove skips for named aggregations (GH#11036) Patrick Hoefler

Don’t deep-copy read-only buffers on unpickle (GH#8609) crusaderky

Add dask-expr to dask conda recipe (GH#8601) Charles Blackmon-Luca

2024.4.0¶

Highlights¶

Query planning fixes¶

This release contains a variety of bugfixes in Dask DataFrame’s new query planner.

GPU metric dashboard fixes¶

GPU memory and utilization dashboard functionality has been restored. Previously these plots were unintentionally left blank.

See GH#8572 from Benjamin Zaitlen for details.

Additional changes

Build nightlies on tag releases (GH#11014) Charles Blackmon-Luca

Remove xfail tracebacks from test suite (GH#11028) Patrick Hoefler

Fix CI for upstream pandas changes (GH#11027) Patrick Hoefler

Fix value_counts raising if branch exists of nans only (GH#11023) Patrick Hoefler

Enable custom expressions in dask_cudf (GH#11013) Richard (Rick) Zamora

Raise ImportError instead of ValueError when dask-expr cannot be imported (GH#11007) James Lamb

Add HyperSpy to ecosystem.rst (GH#11008) Jonas Lähnemann

Add Hugging Face hf:// to the list of fsspec compatible remote services (GH#11012) Quentin Lhoest

Bump actions/checkout from 4.1.1 to 4.1.2 (GH#11009)

Refresh documentation for annotations and spans (GH#8593) crusaderky

Fixup deprecation warning from pandas (GH#8564) Patrick Hoefler

Add Python 3.11 to GPU CI matrix (GH#8598) Charles Blackmon-Luca

Deadline to use a monotonic timer (GH#8597) crusaderky

Update gpuCI RAPIDS_VER to 24.06 (GH#8588)

Refactor restart() and restart_workers() (GH#8550) crusaderky

Bump actions/checkout from 4.1.1 to 4.1.2 (GH#8587)

Include type in failed sizeof warning (GH#8580) James Bourbeau

2024.3.1¶

This is a minor release that primarily demotes an exception to a warning if

dask-expr is not installed when upgrading.

Additional changes

Only warn if dask-expr is not installed (GH#11003) Florian Jetter

Fix typos found by codespell (GH#10993) Dimitri Papadopoulos Orfanos

Extra CI job with dask-expr disabled (GH#8583) crusaderky

Fix flaky test_restart_waits_for_new_workers (GH#8573) crusaderky

Fix flaky test_raise_on_incompatible_partitions (GH#8571) crusaderky

2024.3.0¶

Released on March 11, 2024

Highlights¶

Query planning¶

This release enables query planning by default for all users of dask.dataframe.

The query planning functionality represents a rewrite of the DataFrame using

dask-expr. This is a drop-in replacement and we expect that most users will

not have to adjust any of their code.

Any feedback can be reported on the Dask issue tracker or on the query planning feedback issue.

If you encounter any issues, you can still opt out by setting:

>>> import dask

>>> dask.config.set({'dataframe.query-planning': False})

Sunset of Pandas 1.X support¶

The new query planning backend requires at least pandas 2.0. This pandas version will automatically be installed if you are installing from conda or if you are installing using dask[complete] or dask[dataframe] from pip.

The legacy DataFrame implementation still supports pandas 1.X if you install dask without extras.

Additional changes

Update tests for pandas nightlies with dask-expr (GH#10989) Patrick Hoefler

Use dask-expr docs as main reference docs for DataFrames (GH#10990) Patrick Hoefler

Adjust from_array test for dask-expr (GH#10988) Patrick Hoefler

Unskip to_delayed test (GH#10985) Patrick Hoefler

Bump conda-incubator/setup-miniconda from 3.0.1 to 3.0.3 (GH#10978)

Fix bug when enabling dask-expr (GH#10977) Patrick Hoefler

Update docs and requirements for dask-expr and remove warning (GH#10976) Patrick Hoefler

Fix numpy 2 compatibility with ogrid usage (GH#10929) David Hoese

Turn on dask-expr switch (GH#10967) Patrick Hoefler

Force initializing the random seed with the same byte order interpret… (GH#10970) Elliott Sales de Andrade

Use correct encoding for line terminator when reading CSV (GH#10972) Elliott Sales de Andrade

perf: do not unnecessarily recalculate input/output indices in _optimize_blockwise (GH#10966) Lindsey Gray

Adjust tests for string option in dask-expr (GH#10968) Patrick Hoefler

Adjust tests for array conversion in dask-expr (GH#10973) Patrick Hoefler

TST: Fix sizeof tests on 32bit (GH#10971) Elliott Sales de Andrade

TST: Add missing skip for pyarrow (GH#10969) Elliott Sales de Andrade

Implement dask-expr conversion for bag.to_dataframe (GH#10963) Patrick Hoefler

Clean up Sphinx documentation for dask.config (GH#10959) crusaderky

Use stdlib importlib.metadata on Python 3.12+ (GH#10955) wim glenn

Cast partitioning_index to smaller size (GH#10953) Florian Jetter

Reuse dask/dask groupby Aggregation (GH#10952) Patrick Hoefler

ensure tokens on futures are unique (GH#8569) Florian Jetter

Don’t obfuscate fine performance metrics failures (GH#8568) crusaderky

Mark shuffle fast tasks in dask-expr (GH#8563) crusaderky

Weigh gilknocker Prometheus metric by duration (GH#8558) crusaderky

Fix scheduler transition error on memory->erred (GH#8549) Hendrik Makait

Fix flaky test_Future_release_sync (GH#8562) crusaderky

Fix flaky test_flaky_connect_recover_with_retry (GH#8556) Hendrik Makait

typing tweaks in scheduler.py (GH#8551) crusaderky

Bump conda-incubator/setup-miniconda from 3.0.2 to 3.0.3 (GH#8553)

Install dask-expr on CI (GH#8552) Hendrik Makait

P2P shuffle can drop partition column before writing to disk (GH#8531) Hendrik Makait

Better logging for worker removal (GH#8517) crusaderky

Add indicator support to merge (GH#8539) Patrick Hoefler

Bump conda-incubator/setup-miniconda from 3.0.1 to 3.0.2 (GH#8535)

Avoid iteration error when getting module path (GH#8533) James Bourbeau

Ignore stdlib threading module in code collection (GH#8532) James Bourbeau

Fix excessive logging on P2P retry (GH#8511) Hendrik Makait

Prevent typos in retire_workers parameters (GH#8524) crusaderky

Cosmetic cleanup of test_steal (backport from #8185) (GH#8509) crusaderky

Fix flaky test_compute_per_key (GH#8521) crusaderky

Fix flaky test_no_workers_timeout_queued (GH#8523) crusaderky

2024.2.1¶

Released on February 23, 2024

Highlights¶

Allow silencing dask.DataFrame deprecation warning¶

The last release contained a DeprecationWarning that alerts users to an upcoming switch of dask.dataframe to the new backend with support for query planning (see also GH#10934).

This DeprecationWarning is triggered on import of the dask.dataframe module, and the community raised concerns about it being too verbose.

It is now possible to silence this warning:

# via Python

>>> dask.config.set({'dataframe.query-planning-warning': False})

# via CLI

dask config set dataframe.query-planning-warning False

More robust distributed scheduler for rare key collisions¶

Blockwise fusion optimization can cause a task key collision that is not handled properly by the distributed scheduler (see GH#9888). Users will typically notice this as one of various internal exceptions that cause a system deadlock or critical failure. While this issue could not be fixed, the scheduler now implements a mechanism that should mitigate most occurrences and issues a warning if the issue is detected.

See GH#8185 from crusaderky and Florian Jetter for details.

Over the course of this, various improvements to tokenization have been

implemented. See GH#10913, GH#10884, GH#10919, GH#10896 and

primarily GH#10883 from crusaderky for more details.

More robust adaptive scaling on large clusters¶

Adaptive scaling could previously lose data during downscaling if many tasks had to be moved. This typically, but not exclusively, occurred on large clusters. It would manifest as a recomputation of tasks and could cause clusters to oscillate between up- and downscaling without ever finishing.

See GH#8522 from crusaderky for more details.

Additional changes

Remove flaky fastparquet test (GH#10948) Patrick Hoefler

Enable Aggregation from dask-expr (GH#10947) Patrick Hoefler

Update tests for assign change in dask-expr (GH#10944) Patrick Hoefler

Adjust for pandas large string change (GH#10942) Patrick Hoefler

Fix flaky test_describe_empty (GH#10943) crusaderky

Use Python 3.12 as reference environment (GH#10939) crusaderky

[Cosmetic] Clean up temp paths in test_config.py (GH#10938) crusaderky

[CLI] dask config set and dask config find updates. (GH#10930) Miles

combine_first when a chunk is full of NaNs (GH#10932) crusaderky

Correctly parse lowercase true/false config from CLI (GH#10926) crusaderky

dask config get fix when printing None values (GH#10927) crusaderky

query-planning can’t be None (GH#10928) crusaderky

Make nunique faster again (GH#10922) Patrick Hoefler

Clean up some Cython warnings handling (GH#10924) crusaderky

Bump pre-commit/action from 3.0.0 to 3.0.1 (GH#10920)

Raise and avoid data loss if meta provided to P2P shuffle is wrong (GH#8520) Florian Jetter

Fix gpuci: np.product is deprecated (GH#8518) crusaderky

Update gpuCI RAPIDS_VER to 24.04 (GH#8471)

Unpin ipywidgets on Python 3.12 (GH#8516) crusaderky

Keep old dependencies on run_spec collision (GH#8512) crusaderky

Trivial mypy fix (GH#8513) crusaderky

Ensure large payload can be serialized and sent over comms (GH#8507) Florian Jetter

Allow large graph warning threshold to be configured (GH#8508) Florian Jetter

Tokenization-related test tweaks (backport from #8185) (GH#8499) crusaderky

Tweaks to update_graph (backport from #8185) (GH#8498) crusaderky

AMM: test incremental retirements (GH#8501) crusaderky

Suppress dask-expr warning in CI (GH#8505) crusaderky

Ignore dask-expr warning in CI (GH#8504) James Bourbeau

Improve tests for P2P stable ordering (GH#8458) Hendrik Makait

Bump pre-commit/action from 3.0.0 to 3.0.1 (GH#8503)

2024.2.0¶

Released on February 9, 2024

Highlights¶

Deprecate Dask DataFrame implementation¶

The current Dask DataFrame implementation is deprecated. In a future release, Dask DataFrame will use a new implementation that contains several improvements, including logical query planning. The user-facing DataFrame API will remain unchanged.

The new implementation is already available and can be enabled by

installing the dask-expr library:

$ pip install dask-expr

and turning the query planning option on:

>>> import dask

>>> dask.config.set({'dataframe.query-planning': True})

>>> import dask.dataframe as dd

API documentation for the new implementation is available at https://docs.dask.org/en/stable/dataframe-api.html

Any feedback can be reported on the Dask issue tracker https://github.com/dask/dask/issues

See GH#10912 from Patrick Hoefler for details.

Improved tokenization¶

This release contains several improvements to Dask’s object tokenization logic. More objects now produce deterministic tokens, which can lead to improved performance through caching of intermediate results.

See GH#10898, GH#10904, GH#10876, GH#10874, and GH#10865 from crusaderky for details.
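The link between deterministic tokens and caching can be sketched in a few lines. This is a toy stand-in built on `repr` and `hashlib`, not Dask's tokenization logic; `toy_tokenize`, `cached_call`, and `expensive` are hypothetical names, and the approach only works for objects whose `repr` is stable.

```python
import hashlib

cache = {}  # maps token -> previously computed result
calls = []  # records how often the function body actually runs


def toy_tokenize(*args):
    """Hash a stable textual representation of the arguments into a hex token."""
    return hashlib.sha256(repr(args).encode()).hexdigest()


def cached_call(func, *args):
    """Reuse an earlier result when the function name and arguments tokenize identically."""
    key = toy_tokenize(func.__name__, args)
    if key not in cache:
        cache[key] = func(*args)
    return cache[key]


def expensive(x, y):
    calls.append((x, y))
    return x + y


first = cached_call(expensive, 1, 2)
second = cached_call(expensive, 1, 2)  # same token, so the cached result is reused
```

If equal inputs produced different tokens on each call, the second lookup would miss the cache and the work would be repeated, which is why more deterministic tokens can translate into less recomputation.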

Additional changes

Fix inplace modification on read-only arrays for string conversion (GH#10886) Patrick Hoefler

Add changelog entry for dask-expr (GH#10915) Patrick Hoefler

Fix leftsemi merge for cudf (GH#10914) Patrick Hoefler

Slight update to dask-expr warning (GH#10916) James Bourbeau

Improve performance for groupby.nunique (GH#10910) Patrick Hoefler

Add configuration for leftsemi merges in dask-expr (GH#10908) Patrick Hoefler

Adjust assign test for dask-expr (GH#10907) Patrick Hoefler

Avoid pytest.warns in test_to_datetime for GPU CI (GH#10902) Richard (Rick) Zamora

Update deployment options in docs homepage (GH#10901) James Bourbeau

Fix typo in dataframe docs (GH#10900) Matthew Rocklin

Bump peter-evans/create-pull-request from 5 to 6 (GH#10894)

Fix mimesis API >=13.1.0 - use random.randint (GH#10888) Miles

Adjust invalid test (GH#10897) Patrick Hoefler

Pickle da.argwhere and da.count_nonzero (GH#10885) crusaderky

Fix dask-expr tests after singleton pr (GH#10892) Patrick Hoefler

Add a couple of dask-expr fixes for new parquet cache (GH#10880) Florian Jetter

Update deployment documentation (GH#10882) Matthew Rocklin

Start with dask-expr doc build (GH#10879) Patrick Hoefler

Test tokenization of static and class methods (GH#10872) crusaderky

Add distributed.print and distributed.warn to API docs (GH#10878) James Bourbeau

Run macos ci on M1 architecture (GH#10877) Patrick Hoefler

Update tests for dask-expr (GH#10838) Patrick Hoefler

Update parquet tests to align with dask-expr fixes (GH#10851) Richard (Rick) Zamora

Fix regression in test_graph_manipulation (GH#10873) crusaderky

Adjust pytest errors for dask-expr ci (GH#10871) Patrick Hoefler

Set upper bound version for numba when pandas<2.1 (GH#10890) Miles

Deprecate method parameter in DataFrame.fillna (GH#10846) Miles

Remove warning filter from pyproject.toml (GH#10867) Patrick Hoefler

Skip test_append_with_partition for fastparquet (GH#10828) Patrick Hoefler

Fix pytest 8 issues (GH#10868) Patrick Hoefler

Adjust test for support of median in Groupby.aggregate in dask-expr (2/2) (GH#10870) Hendrik Makait

Allow length of ascending to be larger than one in sort_values (GH#10864) Florian Jetter

Allow other message raised in Python 3.9 (GH#10862) Hendrik Makait

Don’t crash when getting computation code in pathological cases (GH#8502) James Bourbeau

Bump peter-evans/create-pull-request from 5 to 6 (GH#8494)

fix test of cudf spilling metrics (GH#8478) Mads R. B. Kristensen

Upgrade to pytest 8 (GH#8482) crusaderky

Fix test_two_consecutive_clients_share_results (GH#8484) crusaderky

2024.1.1¶

Released on January 26, 2024

Highlights¶

Pandas 2.2 and Scipy 1.12 support¶

This release contains compatibility updates for the latest pandas and scipy releases.

See GH#10834, GH#10849, GH#10845, and GH#8474 from crusaderky for details.

Deprecations¶

Deprecate out= and dtype= parameter in most DataFrame methods (GH#10800) crusaderky

Deprecate axis in groupby cumulative transformers (GH#10796) Miles

Rename shuffle to shuffle_method in remaining methods (GH#10797) Miles

Additional changes

Add recommended deployment options to deployment docs (GH#10866) James Bourbeau

Improve _agg_finalize to conform to output expectation (GH#10835) Hendrik Makait

Implement deterministic tokenization for hlg (GH#10817) Patrick Hoefler

Refactor: move tests for tokenize() to its own module (GH#10863) crusaderky

Update DataFrame examples section (GH#10856) James Bourbeau

Temporarily pin mimesis<13.1.0 (GH#10860) James Bourbeau

Trivial cosmetic tweaks to _testing.py (GH#10857) crusaderky

Unskip and adjust tests for groupby-aggregate with median using dask-expr (GH#10832) Hendrik Makait

Fix test for sizeof(pd.MultiIndex) in upstream CI (GH#10850) crusaderky

numpy 2.0: fix slicing by uint64 array (GH#10854) crusaderky

Rename numpy version constants to match pandas (GH#10843) crusaderky

Bump actions/cache from 3 to 4 (GH#10852)

Update gpuCI RAPIDS_VER to 24.04 (GH#10841)

Fix deprecations in doctest (GH#10844) crusaderky

Changed dtype arithmetics in numpy 2.x (GH#10831) crusaderky

Adjust tests for median support in dask-expr (GH#10839) Patrick Hoefler

Adjust tests for median support in groupby-aggregate in dask-expr (GH#10840) Hendrik Makait

numpy 2.x: fix std() on MaskedArray (GH#10837) crusaderky

Fail dask-expr ci if tests fail (GH#10829) Patrick Hoefler

Activate query_planning when exporting tests (GH#10833) Patrick Hoefler

Expose dataframe tests (GH#10830) Patrick Hoefler

numpy 2: deprecations in n-dimensional fft functions (GH#10821) crusaderky

Generalize CreationDispatch for dask-expr (GH#10794) Richard (Rick) Zamora

Remove circular import when dask-expr enabled (GH#10824) Miles

Minor[CI]: publish-test-results not marked as failed (GH#10825) Miles

Fix more tests to use pytest.warns() (GH#10818) Michał Górny

np.unique(): inverse is shaped in numpy 2 (GH#10819) crusaderky

Pin test_split_adaptive_files to pyarrow engine (GH#10820) Patrick Hoefler

Adjust remaining tests in dask/dask (GH#10813) Patrick Hoefler

Restrict test to Arrow only (GH#10814) Patrick Hoefler

Filter warnings from std test (GH#10815) Patrick Hoefler

Adjust mostly indexing tests (GH#10790) Patrick Hoefler

Updates to deployment docs (GH#10778) Sarah Charlotte Johnson

Adjust test_to_datetime for dask-expr compatibility Hendrik Makait

Upstream CI tweaks (GH#10806) crusaderky

Improve tests for to_numeric (GH#10804) Hendrik Makait

Handle matrix subclass serialization (GH#8480) Florian Jetter

Use smallest data type for partition column in P2P (GH#8479) Florian Jetter

pandas 2.2: fix test_dataframe_groupby_tasks (GH#8475) crusaderky

Bump actions/cache from 3 to 4 (GH#8477)

pandas 2.2 vs. pyarrow 14: deprecated DatetimeTZBlock (GH#8476) crusaderky

pandas 2.2.0: Deprecated frequency alias M in favor of ME (GH#8473) Hendrik Makait

Fix docs build (GH#8472) Hendrik Makait

Fix P2P-based joins with explicit npartitions (GH#8470) Hendrik Makait

Nit: hardcode Python version in test report environment (GH#8462) crusaderky

Change test_report.py - skip bad artifacts in dask/dask (GH#8461) Miles

Replace all occurrences of sys.is_finalizing (GH#8449) Florian Jetter

2024.1.0¶

Released on January 12, 2024

Highlights¶

Partial rechunks within P2P¶

P2P rechunking now utilizes the relationships between input and output chunks. For situations that do not require all-to-all data transfer, this may significantly reduce the runtime and memory/disk footprint. It also enables task culling.

See GH#8330 from Hendrik Makait for details.
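The relationship between input and output chunks mentioned above can be sketched along a single axis. The helpers `chunk_bounds` and `overlapping_inputs` are hypothetical and only show which input chunks each output chunk needs; they are not the P2P rechunking code.

```python
from itertools import accumulate


def chunk_bounds(chunks):
    """Turn chunk sizes into (start, stop) intervals along one axis."""
    stops = list(accumulate(chunks))
    starts = [0] + stops[:-1]
    return list(zip(starts, stops))


def overlapping_inputs(input_chunks, output_chunks):
    """Map each output chunk index to the input chunk indices it overlaps."""
    in_bounds = chunk_bounds(input_chunks)
    needed = {}
    for out_idx, (o_start, o_stop) in enumerate(chunk_bounds(output_chunks)):
        needed[out_idx] = [
            in_idx
            for in_idx, (i_start, i_stop) in enumerate(in_bounds)
            if i_start < o_stop and o_start < i_stop
        ]
    return needed


# Input chunks of size 2 are merged pairwise into output chunks of size 4.
deps = overlapping_inputs(input_chunks=(2, 2, 2, 2), output_chunks=(4, 4))

# Two chunks of size 3 are split into three chunks of size 2.
deps_split = overlapping_inputs(input_chunks=(3, 3), output_chunks=(2, 2, 2))
```

In the first case the two output chunks depend on disjoint sets of inputs, so no all-to-all transfer is needed and an output chunk that is never requested can be culled together with its inputs.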

Fastparquet engine deprecated¶

The fastparquet Parquet engine has been deprecated. Users should migrate to the pyarrow

engine by installing PyArrow and removing

engine="fastparquet" in read_parquet or to_parquet calls.

See GH#10743 from crusaderky for details.

Improved serialization for arbitrary data¶

This release improves serialization robustness for arbitrary data. Previously there were some cases where serialization could fail for non-msgpack serializable data. In those cases we now fall back to using pickle.

See GH#8447 from Hendrik Makait for details.
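The fallback pattern can be sketched with the standard library alone. Here `json` stands in for msgpack as the restricted serializer, and the one-byte prefix is an invented framing for this example; none of this reflects the actual wire format used by distributed.

```python
import json
import pickle


def dumps_with_fallback(obj):
    """Try a restricted serializer first; fall back to pickle if it cannot handle obj."""
    try:
        return b"J" + json.dumps(obj).encode()
    except TypeError:
        return b"P" + pickle.dumps(obj)


def loads_with_fallback(data):
    """Dispatch on the one-byte prefix written by dumps_with_fallback."""
    tag, payload = data[:1], data[1:]
    if tag == b"J":
        return json.loads(payload.decode())
    return pickle.loads(payload)


plain = dumps_with_fallback({"x": [1, 2, 3]})
exotic = dumps_with_fallback({"x": {1, 2, 3}})  # sets are not JSON-serializable
```

Both payloads round-trip through `loads_with_fallback`; only the second one takes the pickle path.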

Additional deprecations¶

Deprecate shuffle keyword in favour of shuffle_method for DataFrame methods (GH#10738) Hendrik Makait

Deprecate automatic argument inference in repartition (GH#10691) Patrick Hoefler

Deprecate npartitions="auto" for set_index & sort_values (GH#10750) Miles

Additional changes

Avoid shortcut in tasks shuffle that led to data loss (GH#10763) Patrick Hoefler

Ignore data tasks when ordering (GH#10706) Florian Jetter

Add get_dummies from dask-expr (GH#10791) Patrick Hoefler

Adjust IO tests for dask-expr migration (GH#10776) Patrick Hoefler

Remove deprecation warning about sort and split_out in groupby (GH#10788) Patrick Hoefler

Address pandas deprecations (GH#10789) Patrick Hoefler

Import distributed only once in get_scheduler (GH#10771) Florian Jetter

Simplify GitHub actions (GH#10781) crusaderky

Clean up redundant bits in CI (GH#10768) crusaderky

Update tests for ufunc (GH#10773) Patrick Hoefler

Use pytest.mark.skipif(DASK_EXPR_ENABLED) (GH#10774) crusaderky

Adjust shuffle tests for dask-expr (GH#10759) Patrick Hoefler

Fix some deprecation warnings from pandas (GH#10749) Patrick Hoefler

Adjust shuffle tests for dask-expr (GH#10762) Patrick Hoefler

Update pre-commit (GH#10767) Hendrik Makait

Clean up config switches in CI (GH#10766) crusaderky

Improve exception for validate_key (GH#10765) Hendrik Makait

Handle datetimeindexes in set_index with unknown divisions (GH#10757) Patrick Hoefler

Add hashing for decimals (GH#10758) Patrick Hoefler

Review tests for is_monotonic (GH#10756) crusaderky

Change argument order in value_counts_aggregate (GH#10751) Patrick Hoefler

Adjust some groupby tests for dask-expr (GH#10752) Patrick Hoefler

Restrict mimesis to < 12 for 3.9 build (GH#10755) Patrick Hoefler

Don’t evaluate config in skip condition (GH#10753) Patrick Hoefler

Adjust some tests to be compatible with dask-expr (GH#10714) Patrick Hoefler

Make dask.array.utils functions more generic to other Dask Arrays (GH#10676) Matthew Rocklin

Remove duplicate “single machine” section (GH#10747) Matthew Rocklin

Tweak ORC engine= parameter (GH#10746) crusaderky

Add pandas 3.0 deprecations and migration prep for dask-expr (GH#10723) Miles

Add task graph animation to docs homepage (GH#10730) Sarah Charlotte Johnson

Use new Xarray logo (GH#10729) James Bourbeau

Update tab styling on “10 Minutes to Dask” page (GH#10728) James Bourbeau

Update environment file upload step in CI (GH#10726) James Bourbeau

Don’t duplicate unobserved categories in GroupBy.nunique if split_out>1 (GH#10716) Patrick Hoefler

Changelog entry for dask.order update (GH#10715) Florian Jetter

Relax redundant-key check in _check_dsk (GH#10701) Richard (Rick) Zamora

Revert pickle change (GH#8456) Florian Jetter

Adapt test_report.py to support dask/dask repository (GH#8450) Miles

Maintain stable ordering for P2P shuffling (GH#8453) Hendrik Makait

Allow tests workflow to be dispatched manually by maintainers (GH#8445) Erik Sundell

Make scheduler-related transition functionality private (GH#8448) Hendrik Makait

Update pre-commit hooks (GH#8444) Hendrik Makait

Do not always check if __main__ in result when pickling (GH#8443) Florian Jetter

Delegate wait_for_workers to cluster instances only when implemented (GH#8441) Erik Sundell

Extend sleep in test_pandas (GH#8440) Julian Gilbey

Avoid deprecated shuffle keyword (GH#8439) Hendrik Makait

Shuffle metrics 4/4: Remove bespoke diagnostics (GH#8367) crusaderky

Do not run gilknocker in testsuite (GH#8423) Florian Jetter

Tweak abstractmethods (GH#8427) crusaderky

Shuffle metrics 3/4: Capture background metrics (GH#8366) crusaderky

Shuffle metrics 2/4: Add background metrics (GH#8365) crusaderky

Shuffle metrics 1/4: Add foreground metrics (GH#8364) crusaderky

Bump actions/upload-artifact from 3 to 4 (GH#8420)

Fix test_merge_p2p_shuffle_reused_dataframe_with_different_parameters (GH#8422) Hendrik Makait

Improve logging in P2P’s scheduler plugin (GH#8410) Hendrik Makait

Re-enable test_decide_worker_coschedule_order_neighbors (GH#8402) Florian Jetter

Add cuDF spilling statistics to RMM/GPU memory plot (GH#8148) Charles Blackmon-Luca

Fix inconsistent hashing for Nanny-spawned workers (GH#8400) Charles Stern

Do not allow workers to downscale if they are running long-running tasks (e.g. worker_client) (GH#7481) Florian Jetter

Fix flaky test_subprocess_cluster_does_not_depend_on_logging (GH#8417) crusaderky

2023.12.1¶

Released on December 15, 2023

Highlights¶

Logical Query Planning now available for Dask DataFrames¶

Dask DataFrames are now much more performant by using a logical query planner. This feature is currently off by default, but can be turned on with:

dask.config.set({"dataframe.query-planning": True})

You also need to have dask-expr installed:

pip install dask-expr

We’ve seen promising performance improvements so far, see this blog post and these regularly updated benchmarks for more information. A more detailed explanation of how the query optimizer works can be found in this blog post.

This feature is still under active development and the API isn’t stable yet, so breaking changes can occur. We expect to make the query optimizer the default early next year.

See GH#10634 from Patrick Hoefler for details.

Dtype inference in read_parquet¶

read_parquet will now infer the Arrow types pa.date32(), pa.date64() and pa.decimal() as an ArrowDtype in pandas. These dtypes are backed by the original Arrow array and thus avoid the conversion to NumPy object. Additionally, read_parquet will no longer infer nested and binary types as strings; they will be stored in NumPy object arrays.

See GH#10698 and GH#10705 from Patrick Hoefler for details.

Scheduling improvements to reduce memory usage¶

This release includes a major rewrite of a core part of our scheduling logic. It includes a new approach to the topological sorting algorithm in dask.order, which determines the order in which tasks are run. Improper ordering is known to be a major contributor to excessive cluster memory pressure.

Updates in this release fix a couple of performance regressions that were introduced

in the release 2023.10.0 (see GH#10535). Generally, computations should now

be much more eager to release data if it is no longer required in memory.

See GH#10660, GH#10697 from Florian Jetter for details.
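To see why task ordering matters for memory, the sketch below replays two valid execution orders of the same small graph and tracks how much data is held at once. It is a simplified model with made-up task names and sizes, and `peak_memory` is a hypothetical helper; it does not reproduce the dask.order algorithm.

```python
def peak_memory(order, dependencies, sizes):
    """Return the peak total size held while running tasks in `order`.

    A result is released once every task depending on it has run;
    results without dependents are kept as final outputs.
    """
    dependents = {key: set() for key in dependencies}
    for key, deps in dependencies.items():
        for dep in deps:
            dependents[dep].add(key)
    held, current, peak = set(), 0, 0
    for key in order:
        held.add(key)
        current += sizes[key]
        peak = max(peak, current)
        for dep in dependencies[key]:
            dependents[dep].discard(key)
            if not dependents[dep] and dep in held:
                held.discard(dep)
                current -= sizes[dep]
    return peak


# Four large "load" tasks, each reduced to a small result, then summed.
dependencies = {f"load{i}": [] for i in range(4)}
dependencies.update({f"reduce{i}": [f"load{i}"] for i in range(4)})
dependencies["sum"] = [f"reduce{i}" for i in range(4)]
sizes = {key: 100 if key.startswith("load") else 1 for key in dependencies}

all_loads_first = [f"load{i}" for i in range(4)] + [f"reduce{i}" for i in range(4)] + ["sum"]
reduce_eagerly = [name for i in range(4) for name in (f"load{i}", f"reduce{i}")] + ["sum"]

peak_loads_first = peak_memory(all_loads_first, dependencies, sizes)
peak_eager = peak_memory(reduce_eagerly, dependencies, sizes)
```

Both orders respect the dependencies, but running each reduction right after its load lets the large inputs be released early, so the peak stays close to the size of a single load.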

Improved P2P-based merging robustness and performance¶

This release contains several updates that fix a possible deadlock introduced in 2023.9.2 and improve the robustness of P2P-based merging when the cluster is dynamically scaling up.

See GH#8415, GH#8416, and GH#8414 from Hendrik Makait for details.

Removed disabling pickle option¶

The distributed.scheduler.pickle configuration option is no longer supported.

As of the 2023.4.0 release, pickle is used to transmit task graphs, so it can no longer be disabled. We now raise an informative error when distributed.scheduler.pickle is set to False.

See GH#8401 from Florian Jetter for details.

Additional changes

Add changelog entry for recent P2P merge fixes (GH#10712) Hendrik Makait

Update DataFrame page (GH#10710) Matthew Rocklin

Add changelog entry for dask-expr switch (GH#10704) Patrick Hoefler

Improve changelog entry for PipInstall changes (GH#10711) Hendrik Makait

Remove PR labeler (GH#10709) James Bourbeau

Add .__wrapped__ to Delayed object (GH#10695) Andrew S. Rosen

Bump actions/labeler from 4.3.0 to 5.0.0 (GH#10689)

Bump actions/stale from 8 to 9 (GH#10690)

[Dask.order] Remove non-runnable leaf nodes from ordering (GH#10697) Florian Jetter

Update installation docs (GH#10699) Matthew Rocklin

Fix software environment link in docs (GH#10700) James Bourbeau

Avoid converting non-strings to arrow strings for read_parquet (GH#10692) Patrick Hoefler

Bump xarray-contrib/issue-from-pytest-log from 1.2.7 to 1.2.8 (GH#10687)

Docs update, fixup styling, mention free (GH#10679) Matthew Rocklin

Update deployment docs (GH#10680) Matthew Rocklin

Dask.order rewrite using a critical path approach (GH#10660) Florian Jetter

Avoid substituting keys that occur multiple times (GH#10646) Florian Jetter

Add missing image to docs (GH#10694) Matthew Rocklin

Bump actions/setup-python from 4 to 5 (GH#10688)

Update landing page (GH#10674) Matthew Rocklin

Make meta check simpler in dispatch (GH#10638) Patrick Hoefler

Pin PR Labeler (GH#10675) Matthew Rocklin

Reorganize docs index a bit (GH#10669) Matthew Rocklin

Bump actions/setup-java from 3 to 4 (GH#10667)

Bump conda-incubator/setup-miniconda from 2.2.0 to 3.0.1 (GH#10668)

Bump xarray-contrib/issue-from-pytest-log from 1.2.6 to 1.2.7 (GH#10666)

Fix test_categorize_info with nightly pyarrow (GH#10662) James Bourbeau

Rewrite test_subprocess_cluster_does_not_depend_on_logging (GH#8409) Hendrik Makait

Avoid RecursionError when failing to pickle key in SpillBuffer and using tblib=3 (GH#8404) Hendrik Makait

Allow tasks to override is_rootish heuristic (GH#8412) Hendrik Makait

Remove GPU executor (GH#8399) Hendrik Makait

Do not rely on logging for subprocess cluster (GH#8398) Hendrik Makait

Update gpuCI RAPIDS_VER to 24.02 (GH#8384)

Bump actions/setup-python from 4 to 5 (GH#8396)

Ensure output chunks in P2P rechunking are distributed homogeneously (GH#8207) Florian Jetter

Trivial: fix typo (GH#8395) crusaderky

Bump JamesIves/github-pages-deploy-action from 4.4.3 to 4.5.0 (GH#8387)

Bump conda-incubator/setup-miniconda from 3.0.0 to 3.0.1 (GH#8388)

2023.12.0¶

Released on December 1, 2023

Highlights¶

PipInstall restart and environment variables¶

The distributed.PipInstall plugin now has more robust restart logic and also supports environment variables.

The example below shows how to use the distributed.PipInstall plugin and a TOKEN environment variable to securely install a package from a private repository:

from dask.distributed import PipInstall

# ${TOKEN} refers to the TOKEN environment variable; client is an existing distributed Client

plugin = PipInstall(packages=["private_package@git+https://${TOKEN}@github.com/dask/private_package.git"])

client.register_plugin(plugin)

See GH#8374, GH#8357, and GH#8343 from Hendrik Makait for details.

Bokeh 3.3.0 compatibility¶

This release contains compatibility updates for using bokeh>=3.3.0 with proxied Dask dashboards.

Previously the contents of dashboard plots wouldn’t be displayed.

See GH#8347 and GH#8381 from Jacob Tomlinson for details.

Additional changes

Add network marker to test_pyarrow_filesystem_option_real_data (GH#10653) Richard (Rick) Zamora

Bump GPU CI to CUDA 11.8 (GH#10656) Charles Blackmon-Luca

Tokenize pandas offsets deterministically (GH#10643) Patrick Hoefler

Add tokenize pd.NA functionality (GH#10640) Patrick Hoefler

Update gpuCI RAPIDS_VER to 24.02 (GH#10636)

Fix precision handling in array.linalg.norm (GH#10556) joanrue

Add axis argument to DataFrame.clip and Series.clip (GH#10616) Richard (Rick) Zamora

Update changelog entry for in-memory rechunking (GH#10630) Florian Jetter

Fix flaky test_resources_reset_after_cancelled_task (GH#8373) crusaderky

Bump GPU CI to CUDA 11.8 (GH#8376) Charles Blackmon-Luca

Bump conda-incubator/setup-miniconda from 2.2.0 to 3.0.0 (GH#8372)

Add debug logs to P2P scheduler plugin (GH#8358) Hendrik Makait

O(1) access for /info/task/ endpoint (GH#8363) crusaderky

Remove stringification from shuffle annotations (GH#8362) crusaderky

Don’t cast int metrics to float (GH#8361) crusaderky

Drop asyncio TCP backend (GH#8355) Florian Jetter

Add offload support to context_meter.add_callback (GH#8360) crusaderky

Test that sync() propagates contextvars (GH#8354) crusaderky

captured_context_meter (GH#8352) crusaderky

context_meter.clear_callbacks (GH#8353) crusaderky

Use @log_errors decorator (GH#8351) crusaderky

Fix test_statistical_profiling_cycle (GH#8356) Florian Jetter

Shuffle: don’t parse dask.config at every RPC (GH#8350) crusaderky

Replace Client.register_plugins idempotent argument with .idempotent attribute on plugins (GH#8342) Hendrik Makait

Fix test report generation (GH#8346) Hendrik Makait

Install pyarrow-hotfix on mindeps-pandas CI (GH#8344) Hendrik Makait

Reduce memory usage of scheduler process - optimize scheduler.py::TaskState class (GH#8331) Miles

Bump pre-commit linters (GH#8340) crusaderky

Update cuDF test with explicit dtype=object (GH#8339) Peter Andreas Entschev

Fix Cluster/SpecCluster calls to async close methods (GH#8327) Peter Andreas Entschev

2023.11.0¶

Released on November 10, 2023

Highlights¶

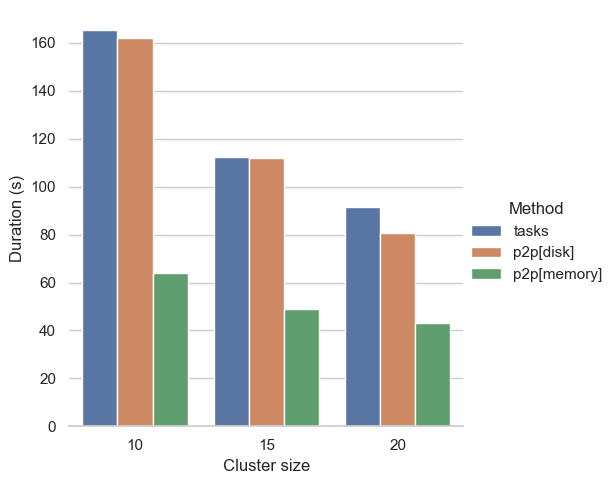

Zero-copy P2P Array Rechunking¶

Users should see significant performance improvements when using in-memory P2P array rechunking. This is due to no longer copying underlying data buffers.

Below is a simple example used to compare the performance of different rechunking methods.

import dask
import dask.array as da

shape = (30_000, 6_000, 150)  # 201.17 GiB
input_chunks = (60, -1, -1)  # 411.99 MiB
output_chunks = (-1, 6, -1)  # 205.99 MiB

arr = da.random.random(shape, chunks=input_chunks)
with dask.config.set({
    "array.rechunk.method": "p2p",
    "distributed.p2p.disk": True,
}):
    (
        arr
        .rechunk(output_chunks)
        .sum()
        .compute()
    )

See GH#8282, GH#8318, and GH#8321 from crusaderky and GH#8322 from Hendrik Makait for details.
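The size comments in the example above can be checked with plain arithmetic. The sketch below assumes 8-byte float64 elements (the dtype produced by da.random.random) and treats -1 in a chunk specification as "span the whole axis". The helper name nbytes is introduced here for illustration only and is not part of Dask.

```python
from math import prod

ITEMSIZE = 8  # bytes per float64 element (assumed dtype)


def nbytes(shape, chunks=None):
    """Bytes in one chunk; with chunks=None, bytes in the whole array."""
    if chunks is None:
        chunks = shape
    # -1 means the chunk covers the full extent of that axis
    dims = [s if c == -1 else c for s, c in zip(shape, chunks)]
    return prod(dims) * ITEMSIZE


shape = (30_000, 6_000, 150)
total_gib = nbytes(shape) / 2**30
input_mib = nbytes(shape, (60, -1, -1)) / 2**20
output_mib = nbytes(shape, (-1, 6, -1)) / 2**20

print(f"{total_gib:.2f} GiB, {input_mib:.2f} MiB, {output_mib:.2f} MiB")
# prints "201.17 GiB, 411.99 MiB, 205.99 MiB"
```

The input chunks span the last two axes and the output chunks span the first and last, so every output chunk needs data from every input chunk. This all-to-all pattern is the case P2P rechunking targets.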

Deprecating PyArrow <14.0.1¶

pyarrow<14.0.1 usage is deprecated starting in this release. It’s recommended for all users to upgrade their version of pyarrow or install pyarrow-hotfix. See this CVE for full details.

See GH#10622 from Florian Jetter for details.

Improved PyArrow filesystem for Parquet¶

Using filesystem="arrow" when reading Parquet datasets now properly infers the correct cloud region when accessing remote, cloud-hosted data.

See GH#10590 from Richard (Rick) Zamora for details.

Improve Type Reconciliation in P2P Shuffling¶

See GH#8332 from Hendrik Makait for details.

Additional changes

Fix sporadic failure of test_dataframe::test_quantile (GH#10625) Miles

Bump minimum click to >=8.1 (GH#10623) Jacob Tomlinson

Avoid PerformanceWarning for fragmented DataFrame (GH#10621) Patrick Hoefler

Generalize computation of NEW_*_VER in GPU CI updating workflow (GH#10610) Charles Blackmon-Luca

Switch to newer GPU CI images (GH#10608) Charles Blackmon-Luca

Remove double slash in fsspec tests (GH#10605) Mario Šaško

Reenable test_ucx_config_w_env_var (GH#8272) Peter Andreas Entschev

Don’t share host_array when receiving from network (GH#8308) crusaderky

Generalize computation of NEW_*_VER in GPU CI updating workflow (GH#8319) Charles Blackmon-Luca

Switch to newer GPU CI images (GH#8316) Charles Blackmon-Luca

Minor updates to shuffle dashboard (GH#8315) Matthew Rocklin

Don’t use bytearray().join (GH#8312) crusaderky

Reuse identical shuffles in P2P hash join (GH#8306) Hendrik Makait

2023.10.1¶

Released on October 27, 2023

Highlights¶

Python 3.12¶

This release adds official support for Python 3.12.

See GH#10544 and GH#8223 from Thomas Grainger for details.

Additional changes

Avoid splitting parquet files to row groups as aggressively (GH#10600) Matthew Rocklin

Speed up normalize_chunks for common case (GH#10579) Martin Durant

Use Python 3.11 for upstream and doctests CI build (GH#10596) Thomas Grainger

Bump actions/checkout from 4.1.0 to 4.1.1 (GH#10592)

Switch to PyTables HEAD (GH#10580) Thomas Grainger

Remove numpy.core warning filter, link to issue on pyarrow caused BlockManager warning (GH#10571) Thomas Grainger

Unignore and fix deprecated freq aliases (GH#10577) Thomas Grainger

Move register_assert_rewrite earlier in conftest to fix warnings (GH#10578) Thomas Grainger

Upgrade versioneer to 0.29 (GH#10575) Thomas Grainger

change test_concat_categorical to be non-strict (GH#10574) Thomas Grainger

Enable SciPy tests with NumPy 2.0 Thomas Grainger

Enable tests for scikit-image with NumPy 2.0 (GH#10569) Thomas Grainger

Fix upstream build (GH#10549) Thomas Grainger

Add optimized code paths for drop_duplicates (GH#10542) Richard (Rick) Zamora

Support cudf backend in dd.DataFrame.sort_values (GH#10551) Richard (Rick) Zamora

Rename “GIL Contention” to just GIL in chart labels (GH#8305) Matthew Rocklin

Bump actions/checkout from 4.1.0 to 4.1.1 (GH#8299)

Fix dashboard (GH#8293) Hendrik Makait

@log_errors for async tasks (GH#8294) crusaderky

Annotations and better tests for serialize_bytes (GH#8300) crusaderky

Temporarily xfail test_decide_worker_coschedule_order_neighbors to unblock CI (GH#8298) James Bourbeau

Skip xdist and matplotlib in code samples (GH#8290) Matthew Rocklin

Use numpy._core on numpy>=2.dev0 (GH#8291) Thomas Grainger

Fix calculation of MemoryShardsBuffer.bytes_read (GH#8289) crusaderky

Allow P2P to store data in-memory (GH#8279) Hendrik Makait

Upgrade versioneer to 0.29 (GH#8288) Thomas Grainger

Allow ResourceLimiter to be unlimited (GH#8276) Hendrik Makait

Run pre-commit autoupdate (GH#8281) Thomas Grainger

Annotate instance variables for P2P layers (GH#8280) Hendrik Makait

Remove worker gracefully should not mark tasks as suspicious (GH#8234) Thomas Grainger

Add signal handling to dask spec (GH#8261) Thomas Grainger

Add typing for sync (GH#8275) Hendrik Makait

Better annotations for shuffle offload (GH#8277) crusaderky

Test minimum versions for p2p shuffle (GH#8270) crusaderky

Run coverage on test failures (GH#8269) crusaderky

Use aiohttp with extensions (GH#8274) Thomas Grainger

2023.10.0¶

Released on October 13, 2023

Highlights¶

Reduced memory pressure for multi array reductions¶

This release contains major updates to Dask’s task graph scheduling logic. The updates here significantly reduce memory pressure on array reductions. We anticipate this will have a strong impact on the array computing community.

See GH#10535 from Florian Jetter for details.

Improved P2P shuffling robustness¶

There are several updates (listed below) that make P2P shuffling much more robust and less likely to fail.

See GH#8262, GH#8264, GH#8242, GH#8244, and GH#8235 from Hendrik Makait and GH#8124 from Charles Blackmon-Luca for details.

Reduced scheduler CPU load for large graphs¶

Users should see reduced CPU load on their scheduler when computing large task graphs.

See GH#8238 and GH#10547 from Florian Jetter and GH#8240 from crusaderky for details.

Additional changes

Dispatch the partd.Encode class used for disk-based shuffling (GH#10552) Richard (Rick) Zamora

Add documentation for hive partitioning (GH#10454) Richard (Rick) Zamora

Add typing to dask.order (GH#10553) Florian Jetter

Allow passing index_col=False in dd.read_csv (GH#9961) Michael Leslie

Tighten HighLevelGraph annotations (GH#10524) crusaderky

Support for latest ipykernel/ipywidgets (GH#8253) crusaderky

Check minimal pyarrow version for P2P merge (GH#8266) Hendrik Makait

Support for Python 3.12 (GH#8223) Thomas Grainger

Use memoryview.nbytes when warning on large graph send (GH#8268) crusaderky

Run tests without gilknocker (GH#8263) crusaderky

Disable ipv6 on MacOS CI (GH#8254) crusaderky

Clean up redundant minimum versions (GH#8251) crusaderky

Clean up use of BARRIER_PREFIX in scheduler plugin (GH#8252) crusaderky

Improve shuffle run handling in P2P’s worker plugin (GH#8245) Hendrik Makait

Explicitly set charset=utf-8 (GH#8250) crusaderky

Typing tweaks to GH#8239 (GH#8247) crusaderky

Simplify scheduler assertion (GH#8246) crusaderky

Improve typing (GH#8239) Hendrik Makait

Respect cgroups v2 “low” memory limit (GH#8243) Samantha Hughes

Fix PackageInstall by making it a scheduler plugin (GH#8142) Hendrik Makait

Xfail test_ucx_config_w_env_var (GH#8241) crusaderky

SpecCluster resilience to broken workers (GH#8233) crusaderky

Suppress SpillBuffer stack traces for cancelled tasks (GH#8232) crusaderky

Update annotations after stringification changes (GH#8195) crusaderky

Reduce max recursion depth of profile (GH#8224) crusaderky

Offload deeply nested objects (GH#8214) crusaderky

Fix flaky test_close_connections (GH#8231) crusaderky

Fix flaky test_popen_timeout (GH#8229) crusaderky

Fix flaky test_adapt_then_manual (GH#8228) crusaderky

Prevent collisions in SpillBuffer (GH#8226) crusaderky

Allow retire_workers to run concurrently (GH#8056) Florian Jetter

Fix HTML repr for TaskState objects (GH#8188) Florian Jetter

Fix AttributeError for builtin_function_or_method in profile.py (GH#8181) Florian Jetter

Fix flaky test_spans (v2) (GH#8222) crusaderky

2023.9.3¶

Released on September 29, 2023

Highlights¶

Restore previous configuration override behavior¶

The 2023.9.2 release introduced an unintentional breaking change in how configuration options are overridden in dask.config.get with the override_with= keyword (see GH#10519). This release restores the previous behavior.

See GH#10521 from crusaderky for details.
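As a reminder of what the override_with= keyword does, here is a minimal sketch that assumes dask is installed. It shows only the documented behavior of dask.config.get (a non-None override_with is returned as-is, otherwise the configured value is used), not the specific regression fixed here. The key and the values are arbitrary examples.

```python
import dask

with dask.config.set({"array.chunk-size": "64MiB"}):
    # override_with=None: fall through to the value stored in the config
    configured = dask.config.get("array.chunk-size", override_with=None)
    # override_with set: the explicit value takes precedence over the config
    overridden = dask.config.get("array.chunk-size", override_with="16MiB")

print(configured, overridden)
```

This pattern lets a function accept an optional keyword argument and fall back to the global configuration when the caller passes None.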

Complex dtypes in Dask Array reductions¶

This release includes improved support for using common reductions in Dask Array (e.g. var, std, moment) with complex dtypes.

See GH#10009 from wkrasnicki for details.

Additional changes

Bump actions/checkout from 4.0.0 to 4.1.0 (GH#10532)

Match pandas reverting apply deprecation (GH#10531) James Bourbeau

Update gpuCI RAPIDS_VER to 23.12 (GH#10526)

Temporarily skip failing tests with fsspec==2023.9.1 (GH#10520) James Bourbeau

2023.9.2¶

Released on September 15, 2023

Highlights¶

P2P shuffling now raises when outdated PyArrow is installed¶

Previously the default shuffling method would silently fall back from P2P to task-based shuffling if an older version of pyarrow was installed. Now we raise an informative error with the minimum required pyarrow version for P2P instead of silently falling back.

See GH#10496 from Hendrik Makait for details.

Deprecation cycle for admin.traceback.shorten¶

The 2023.9.0 release modified the admin.traceback.shorten configuration option without introducing a deprecation cycle. This resulted in failures to create Dask clusters in some cases. This release introduces a deprecation cycle for this configuration change.

See GH#10509 from crusaderky for details.

Additional changes

Avoid materializing all iterators in delayed tasks (GH#10498) James Bourbeau

Overhaul deprecations system in dask.config (GH#10499) crusaderky

Remove unnecessary check in timeseries (GH#10447) Patrick Hoefler

Use register_plugin in tests (GH#10503) James Bourbeau

Make preserve_index explicit in pyarrow_schema_dispatch (GH#10501) Hendrik Makait

Add **kwargs support for pyarrow_schema_dispatch (GH#10500) Hendrik Makait

Centralize and type no_default (GH#10495) crusaderky

2023.9.1¶

Released on September 6, 2023

Note

This is a hotfix release that fixes a P2P shuffling bug introduced in the 2023.9.0 release (see GH#10493).

Enhancements¶

Stricter data type for dask keys (GH#10485) crusaderky

Special handling for None in DASK_ environment variables (GH#10487) crusaderky

Bug Fixes¶

Fix _partitions dtype in meta for DataFrame.set_index and DataFrame.sort_values (GH#10493) Hendrik Makait

Handle cached_property decorators in derived_from (GH#10490) Lawrence Mitchell

Maintenance¶

Bump actions/checkout from 3.6.0 to 4.0.0 (GH#10492)

Simplify some tests that import distributed (GH#10484) crusaderky

2023.9.0¶

Released on September 1, 2023

Bug Fixes¶

Remove support for np.int64 in keys (GH#10483) crusaderky

Fix _partitions dtype in meta for shuffling (GH#10462) Hendrik Makait

Don’t use exception hooks to shorten tracebacks (GH#10456) crusaderky

Documentation¶

Add p2p shuffle option to DataFrame docs (GH#10477) Patrick Hoefler

Maintenance¶

Skip failing tests for pandas=2.1.0 (GH#10488) Patrick Hoefler

Update tests for pandas=2.1.0 (GH#10439) Patrick Hoefler

Enable pytest-timeout (GH#10482) crusaderky

Bump actions/checkout from 3.5.3 to 3.6.0 (GH#10470)

2023.8.1¶

Released on August 18, 2023

Enhancements¶

Adding support for cgroup v2 to cpu_count (GH#10419) Johan Olsson

Support multi-column groupby with sort=True and split_out>1 (GH#10425) Richard (Rick) Zamora

Add DataFrame.enforce_runtime_divisions method (GH#10404) Richard (Rick) Zamora

Enable file mode="x" with a single_file=True for Dask DataFrame to_csv (GH#10443) Genevieve Buckley

Bug Fixes¶

Maintenance¶

Add default types_mapper to from_pyarrow_table_dispatch for pandas (GH#10446) Richard (Rick) Zamora

2023.8.0¶

Released on August 4, 2023

Enhancements¶

Fix for make_timeseries performance regression (GH#10428) Irina Truong

Documentation¶

Add distributed.print to debugging docs (GH#10435) James Bourbeau

Documenting compatibility of NumPy functions with Dask functions (GH#9941) Chiara Marmo

Maintenance¶

Use SPDX in license metadata (GH#10437) John A Kirkham

Require dask[array] in dask[dataframe] (GH#10357) John A Kirkham

Update gpuCI RAPIDS_VER to 23.10 (GH#10427)

Simplify compatibility code (GH#10426) Hendrik Makait

Fix compatibility variable naming (GH#10424) Hendrik Makait

Fix a few errors with upstream pandas and pyarrow (GH#10412) Irina Truong

2023.7.1¶

Released on July 20, 2023

Note

This release updates Dask DataFrame to automatically convert text data using object data types to string[pyarrow] if pandas>=2 and pyarrow>=12 are installed.

This should result in significantly reduced memory consumption and increased computation performance in many workflows that deal with text data.

You can disable this change by setting the dataframe.convert-string configuration value to False with

dask.config.set({"dataframe.convert-string": False})

Enhancements¶

Convert to pyarrow strings if proper dependencies are installed (GH#10400) James Bourbeau

Avoid repartition before shuffle for p2p (GH#10421) Patrick Hoefler

API to generate random Dask DataFrames (GH#10392) Irina Truong

Speed up dask.bag.Bag.random_sample (GH#10356) crusaderky

Raise helpful ValueError for invalid time units (GH#10408) Nat Tabris

Make repartition a no-op when divisions match (divisions provided as a list) (GH#10395) Nicolas Grandemange

Bug Fixes¶

Use dataframe.convert-string in read_parquet token (GH#10411) James Bourbeau

Category dtype is lost when concatenating MultiIndex (GH#10407) Irina Truong

Fix FutureWarning: The provided callable... (GH#10405) Irina Truong

Enable non-categorical hive-partition columns in read_parquet (GH#10353) Richard (Rick) Zamora

concat ignoring DataFrame without columns (GH#10359) Patrick Hoefler

2023.7.0¶

Released on July 7, 2023

Enhancements¶

Catch exceptions when attempting to load CLI entry points (GH#10380) Jacob Tomlinson

Bug Fixes¶

Fix typo in _clean_ipython_traceback (GH#10385) Alexander Clausen

Ensure that df is immutable after from_pandas (GH#10383) Patrick Hoefler

Warn consistently for inplace in Series.rename (GH#10313) Patrick Hoefler

Documentation¶

Add clarification about output shape and reshaping in rechunk documentation (GH#10377) Swayam Patil

Maintenance¶

Simplify astype implementation (GH#10393) Patrick Hoefler

Fix test_first_and_last to accommodate deprecated last (GH#10373) James Bourbeau

Add level to create_merge_tree (GH#10391) Patrick Hoefler

Do not derive from scipy.stats.chisquare docstring (GH#10382) Doug Davis

2023.6.1¶

Released on June 26, 2023

Enhancements¶

Remove no longer supported clip_lower and clip_upper (GH#10371) Patrick Hoefler

Support DataFrame.set_index(..., sort=False) (GH#10342) Miles

Cleanup remote tracebacks (GH#10354) Irina Truong

Add dispatching mechanisms for pyarrow.Table conversion (GH#10312) Richard (Rick) Zamora

Choose P2P even if fusion is enabled (GH#10344) Hendrik Makait

Validate that rechunking is possible earlier in graph generation (GH#10336) Hendrik Makait

Bug Fixes¶

Fix issue with header passed to read_csv (GH#10355) GALI PREM SAGAR

Respect dropna and observed in GroupBy.var and GroupBy.std (GH#10350) Patrick Hoefler

Fix H5FD_lock error when writing to hdf with distributed client (GH#10309) Irina Truong

Fix for total_mem_usage of bag.map() (GH#10341) Irina Truong

Deprecations¶

Deprecate DataFrame.fillna/Series.fillna with method (GH#10349) Irina Truong

Deprecate DataFrame.first and Series.first (GH#10352) Irina Truong

Maintenance¶

Deprecate numpy.compat (GH#10370) Irina Truong

Fix annotations and spans leaking between threads (GH#10367) Irina Truong

Use general kwargs in pyarrow_table_dispatch functions (GH#10364) Richard (Rick) Zamora

Remove unnecessary try/except in isna (GH#10363) Patrick Hoefler

mypy support for numpy 1.25 (GH#10362) crusaderky

Bump actions/checkout from 3.5.2 to 3.5.3 (GH#10348)

Restore numba in upstream build (GH#10330) James Bourbeau

Update nightly wheel index for pandas/numpy/scipy (GH#10346) Matthew Roeschke

Add rechunk config values to yaml (GH#10343) Hendrik Makait

2023.6.0¶

Released on June 9, 2023

Enhancements¶

Add missing not in predicate support to read_parquet (GH#10320) Richard (Rick) Zamora

Bug Fixes¶

Fix for incorrect value_counts (GH#10323) Irina Truong

Update empty describe top and freq values (GH#10319) James Bourbeau

Documentation¶

Fix hetzner typo (GH#10332) Sarah Charlotte Johnson

Maintenance¶

Test with numba and sparse on Python 3.11 (GH#10329) Thomas Grainger

Remove numpy.find_common_type warning ignore (GH#10311) James Bourbeau

Update gpuCI RAPIDS_VER to 23.08 (GH#10310)

2023.5.1¶

Released on May 26, 2023

Note

This release drops support for Python 3.8. As of this release Dask supports Python 3.9, 3.10, and 3.11. See this community issue for more details.

Enhancements¶

Drop Python 3.8 support (GH#10295) Thomas Grainger

Change Dask Bag partitioning scheme to improve cluster saturation (GH#10294) Jacob Tomlinson

Generalize dd.to_datetime for GPU-backed collections, introduce get_meta_library utility (GH#9881) Charles Blackmon-Luca

Add na_action to DataFrame.map (GH#10305) Patrick Hoefler

Raise TypeError in DataFrame.nsmallest and DataFrame.nlargest when columns is not given (GH#10301) Patrick Hoefler

Improve sizeof for pd.MultiIndex (GH#10230) Patrick Hoefler

Support duplicated columns in a bunch of DataFrame methods (GH#10261) Patrick Hoefler

Add numeric_only support to DataFrame.idxmin and DataFrame.idxmax (GH#10253) Patrick Hoefler

Implement numeric_only support for DataFrame.quantile (GH#10259) Patrick Hoefler

Add support for numeric_only=False in DataFrame.std (GH#10251) Patrick Hoefler

Implement numeric_only=False for GroupBy.cumprod and GroupBy.cumsum (GH#10262) Patrick Hoefler

Implement numeric_only for skew and kurtosis (GH#10258) Patrick Hoefler

mask and where should accept a callable (GH#10289) Irina Truong

Fix conversion from Categorical to pa.dictionary in read_parquet (GH#10285) Patrick Hoefler

Bug Fixes¶

Spurious config on nested annotations (GH#10318) crusaderky

Fix rechunking behavior for dimensions with known and unknown chunk sizes (GH#10157) Hendrik Makait

Enable drop to support mismatched partitions (GH#10300) James Bourbeau

Fix divisions construction for to_timestamp (GH#10304) Patrick Hoefler

pandas ExtensionDtype raising in Series reduction operations (GH#10149) Patrick Hoefler

Fix regression in da.random interface (GH#10247) Eray Aslan

da.coarsen doesn’t trim an empty chunk in meta (GH#10281) Irina Truong

Fix dtype inference for engine="pyarrow" in read_csv (GH#10280) Patrick Hoefler

Documentation¶

Add meta_from_array to API docs (GH#10306) Ruth Comer

Update Coiled links (GH#10296) Sarah Charlotte Johnson

Add docs for demo day (GH#10288) Matthew Rocklin

Maintenance¶

Explicitly install anaconda-client from conda-forge when uploading conda nightlies (GH#10316) Charles Blackmon-Luca

Configure isort to add from __future__ import annotations (GH#10314) Thomas Grainger

Avoid pandas Series.__getitem__ deprecation in tests (GH#10308) James Bourbeau

Ignore numpy.find_common_type warning from pandas (GH#10307) James Bourbeau

Add test to check that DataFrame.__setitem__ does not modify df inplace (GH#10223) Patrick Hoefler

Clean up default value of dropna in value_counts (GH#10299) Patrick Hoefler

Add pytest-cov to test extra (GH#10271) James Bourbeau

2023.5.0¶

Released on May 12, 2023

Enhancements¶

Implement numeric_only=False for GroupBy.corr and GroupBy.cov (GH#10264) Patrick Hoefler

Add support for numeric_only=False in DataFrame.var (GH#10250) Patrick Hoefler

Add numeric_only support to DataFrame.mode (GH#10257) Patrick Hoefler

Add DataFrame.map to dask.DataFrame API (GH#10246) Patrick Hoefler

Adjust for DataFrame.applymap deprecation and all NA concat behaviour change (GH#10245) Patrick Hoefler

Enable numeric_only=False for DataFrame.count (GH#10234) Patrick Hoefler

Disallow array input in mask/where (GH#10163) Irina Truong

Support numeric_only=True in GroupBy.corr and GroupBy.cov (GH#10227) Patrick Hoefler

Add numeric_only support to GroupBy.median (GH#10236) Patrick Hoefler

Support mimesis=9 in dask.datasets (GH#10241) James Bourbeau

Add numeric_only support to min, max and prod (GH#10219) Patrick Hoefler

Add numeric_only=True support for GroupBy.cumsum and GroupBy.cumprod (GH#10224) Patrick Hoefler

Add helper to unpack numeric_only keyword (GH#10228) Patrick Hoefler

Bug Fixes¶

Fix clone + from_array failure (GH#10211) crusaderky

Fix dataframe reductions for ea dtypes (GH#10150) Patrick Hoefler

Avoid scalar conversion deprecation warning in numpy=1.25 (GH#10248) James Bourbeau

Make sure transform output has the same index as input (GH#10184) Irina Truong

Fix corr and cov on a single-row partition (GH#9756) Irina Truong

Fix test_groupby_numeric_only_supported and test_groupby_aggregate_categorical_observed upstream errors (GH#10243) Irina Truong

Documentation¶

Clean up futures docs (GH#10266) Matthew Rocklin

Maintenance¶

Warn when meta is passed to apply (GH#10256) Patrick Hoefler

Remove imageio version restriction in CI (GH#10260) Patrick Hoefler

Remove unused DataFrame variance methods (GH#10252) Patrick Hoefler

Un-xfail test_categories with pyarrow strings and pyarrow>=12 (GH#10244) Irina Truong

Bump gpuCI PYTHON_VER 3.8->3.9 (GH#10233) Charles Blackmon-Luca

2023.4.1¶

Released on April 28, 2023

Enhancements¶

Implement numeric_only support for DataFrame.sum (GH#10194) Patrick Hoefler

Add support for numeric_only=True in GroupBy operations (GH#10222) Patrick Hoefler

Avoid deep copy in DataFrame.__setitem__ for pandas 1.4 and up (GH#10221) Patrick Hoefler

Avoid calling Series.apply with _meta_nonempty (GH#10212) Patrick Hoefler

Unpin sqlalchemy and fix compatibility issues (GH#10140) Patrick Hoefler

Bug Fixes¶

Partially revert default client discovery (GH#10225) Florian Jetter

Support arrow dtypes in Index meta creation (GH#10170) Patrick Hoefler

Repartitioning raises with extension dtype when truncating floats (GH#10169) Patrick Hoefler

Adjust empty Index from fastparquet to object dtype (GH#10179) Patrick Hoefler

Documentation¶

Update Kubernetes docs (GH#10232) Jacob Tomlinson

Add DataFrame.reduction to API docs (GH#10229) James Bourbeau

Add DataFrame.persist to docs and fix links (GH#10231) Patrick Hoefler

Add documentation for GroupBy.transform (GH#10185) Irina Truong

Fix formatting in random number generation docs (GH#10189) Eray Aslan

Maintenance¶

Pin imageio to <2.28 (GH#10216) Patrick Hoefler

Add note about importlib_metadata backport (GH#10207) James Bourbeau

Add xarray back to Python 3.11 CI builds (GH#10200) James Bourbeau

Add mindeps build with all optional dependencies (GH#10161) Charles Blackmon-Luca

Provide proper like value for array_safe in percentiles_summary (GH#10156) Charles Blackmon-Luca

Avoid re-opening hdf file multiple times in read_hdf (GH#10205) Thomas Grainger

Add merge tests on nullable columns (GH#10071) Charles Blackmon-Luca

Fix coverage configuration (GH#10203) Thomas Grainger

Remove is_period_dtype and is_sparse_dtype (GH#10197) Patrick Hoefler

Bump actions/checkout from 3.5.0 to 3.5.2 (GH#10201)

Avoid deprecated is_categorical_dtype from pandas (GH#10180) Patrick Hoefler

Adjust for deprecated is_interval_dtype and is_datetime64tz_dtype (GH#10188) Patrick Hoefler

2023.4.0¶

Released on April 14, 2023

Enhancements¶

Override old default values in update_defaults (GH#10159) Gabe Joseph

Add a CLI command to list and get a value from dask config (GH#9936) Irina Truong

Handle string-based engine argument to read_json (GH#9947) Richard (Rick) Zamora

Avoid deprecated GroupBy.dtypes (GH#10111) Irina Truong

Bug Fixes¶

Revert grouper-related changes (GH#10182) Irina Truong

GroupBy.cov raising for non-numeric grouping column (GH#10171) Patrick Hoefler

Updates for Index supporting numpy numeric dtypes (GH#10154) Irina Truong

Preserve dtype for partitioning columns when read with pyarrow (GH#10115) Patrick Hoefler

Fix annotations for to_hdf (GH#10123) Hendrik Makait

Handle None column name when checking if columns are all numeric (GH#10128) Lawrence Mitchell

Fix valid_divisions when passed a tuple (GH#10126) Brian Phillips

Maintain annotations in DataFrame.categorize (GH#10120) Hendrik Makait

Fix handling of missing min/max parquet statistics during filtering (GH#10042) Richard (Rick) Zamora

Deprecations¶

Deprecate use_nullable_dtypes= and add dtype_backend= (GH#10076) Irina Truong

Deprecate convert_dtype in Series.apply (GH#10133) Irina Truong

Documentation¶

Document Generator based random number generation (GH#10134) Eray Aslan

Maintenance¶

Update dataframe.convert_string to dataframe.convert-string (GH#10191) Irina Truong

Add python-cityhash to CI environments (GH#10190) Charles Blackmon-Luca

Temporarily pin scikit-image to fix Windows CI (GH#10186) Patrick Hoefler

Handle pandas deprecation warnings for to_pydatetime and apply (GH#10168) Patrick Hoefler

Drop bokeh<3 restriction (GH#10177) James Bourbeau

Fix failing tests under copy-on-write (GH#10173) Patrick Hoefler

Allow pyarrow CI to fail (GH#10176) James Bourbeau

Switch to Generator for random number generation in dask.array (GH#10003) Eray Aslan

Bump peter-evans/create-pull-request from 4 to 5 (GH#10166)

Fix flaky modf operation in test_arithmetic (GH#10162) Irina Truong

Temporarily remove xarray from CI with pandas 2.0 (GH#10153) James Bourbeau

Fix update_graph counting logic in test_default_scheduler_on_worker (GH#10145) James Bourbeau

Fix documentation build with pandas 2.0 (GH#10138) James Bourbeau

Remove dask/gpu from gpuCI update reviewers (GH#10135) Charles Blackmon-Luca

Update gpuCI RAPIDS_VER to 23.06 (GH#10129)

Bump actions/stale from 6 to 8 (GH#10121)

Use declarative setuptools (GH#10102) Thomas Grainger

Relax assert_eq checks on Scalar-like objects (GH#10125) Matthew Rocklin

Upgrade readthedocs config to ubuntu 22.04 and Python 3.11 (GH#10124) Thomas Grainger

Bump actions/checkout from 3.4.0 to 3.5.0 (GH#10122)

Fix test_null_partition_pyarrow in pyarrow CI build (GH#10116) Irina Truong

Drop distributed pack (GH#9988) Florian Jetter

Make dask.compatibility private (GH#10114) Jacob Tomlinson

2023.3.2¶

Released on March 24, 2023

Enhancements¶

Deprecate observed=False for groupby with categoricals (GH#10095) Irina Truong

Deprecate axis= for some groupby operations (GH#10094) James Bourbeau

The axis keyword in DataFrame.rolling/Series.rolling is deprecated (GH#10110) Irina Truong

DataFrame._data deprecation in pandas (GH#10081) Irina Truong

Use importlib_metadata backport to avoid CLI UserWarning (GH#10070) Thomas Grainger

Port option parsing logic from dask.dataframe.read_parquet to to_parquet (GH#9981) Anton Loukianov

Bug Fixes¶

Avoid using dd.shuffle in groupby-apply (GH#10043) Richard (Rick) Zamora

Enable null hive partitions with pyarrow parquet engine (GH#10007) Richard (Rick) Zamora

Support unknown shapes in *_like functions (GH#10064) Doug Davis

Documentation¶

Add to_backend methods to API docs (GH#10093) Lawrence Mitchell

Remove broken gpuCI link in developer docs (GH#10065) Charles Blackmon-Luca

Maintenance¶

Configure readthedocs sphinx warnings as errors (GH#10104) Thomas Grainger

Un-xfail test_division_or_partition with pyarrow strings active (GH#10108) Irina Truong

Un-xfail test_different_columns_are_allowed with pyarrow strings active (GH#10109) Irina Truong

Restore Entrypoints compatibility (GH#10113) Jacob Tomlinson

Un-xfail test_to_dataframe_optimize_graph with pyarrow strings active (GH#10087) Irina Truong

Only run test_development_guidelines_matches_ci on editable install (GH#10106) Charles Blackmon-Luca

Un-xfail test_dataframe_cull_key_dependencies_materialized with pyarrow strings active (GH#10088) Irina Truong

Install mimesis in CI environments (GH#10105) Charles Blackmon-Luca

Fix for no module named ipykernel (GH#10101) Irina Truong

Fix docs builds by installing ipykernel (GH#10103) Thomas Grainger

Allow pyarrow build to continue on failures (GH#10097) James Bourbeau

Bump actions/checkout from 3.3.0 to 3.4.0 (GH#10096)

Fix test_set_index_on_empty with pyarrow strings active (GH#10054) Irina Truong

Un-xfail pyarrow pickling tests (GH#10082) James Bourbeau

CI environment file cleanup (GH#10078) James Bourbeau

Un-xfail more pyarrow tests (GH#10066) Irina Truong

Temporarily skip pyarrow_compat tests with pandas 2.0 (GH#10063) James Bourbeau

Fix test_melt with pyarrow strings active (GH#10052) Irina Truong

Fix test_str_accessor with pyarrow strings active (GH#10048) James Bourbeau

Fix test_better_errors_object_reductions with pyarrow strings active (GH#10051) James Bourbeau

Fix test_loc_with_non_boolean_series with pyarrow strings active (GH#10046) James Bourbeau

Fix test_values with pyarrow strings active (GH#10050) James Bourbeau

Temporarily xfail test_upstream_packages_installed (GH#10047) James Bourbeau

2023.3.1¶

Released on March 10, 2023

Enhancements¶

Support pyarrow strings in MultiIndex (GH#10040) Irina Truong

Improved support for pyarrow strings (GH#10000) Irina Truong

Fix flaky RuntimeWarning during array reductions (GH#10030) James Bourbeau

Extend complete extras (GH#10023) James Bourbeau

Raise an error with dataframe.convert-string=True and pandas<2.0 (GH#10033) Irina Truong

Rename shuffle/rechunk config option/kwarg to method (GH#10013) James Bourbeau

Add initial support for converting pandas extension dtypes to arrays (GH#10018) James Bourbeau

Remove randomgen support (GH#9987) Eray Aslan

Bug Fixes¶

Skip rechunk when rechunking to the same chunks with unknown sizes (GH#10027) Hendrik Makait

Custom utility to convert parquet filters to pyarrow expression (GH#9885) Richard (Rick) Zamora

Consider numpy scalars and 0d arrays as scalars when padding (GH#9653) Justus Magin

Fix parquet overwrite behavior after an adaptive read_parquet operation (GH#10002) Richard (Rick) Zamora

Maintenance¶

Remove stale hive-partitioning code from pyarrow parquet engine (GH#10039) Richard (Rick) Zamora

Increase minimum supported pyarrow to 7.0 (GH#10024) James Bourbeau

Revert “Prepare drop packunpack (GH#9994)” (GH#10037) Florian Jetter

Have codecov wait for more builds before reporting (GH#10031) James Bourbeau

Prepare drop packunpack (GH#9994) Florian Jetter

Add CI job with pyarrow strings turned on (GH#10017) James Bourbeau

Fix test_groupby_dropna_with_agg for pandas 2.0 (GH#10001) Irina Truong

Fix test_pickle_roundtrip for pandas 2.0 (GH#10011) James Bourbeau

2023.3.0¶

Released on March 1, 2023

Bug Fixes¶

Bag must not pick p2p as shuffle default (GH#10005) Florian Jetter

Documentation¶

Minor follow-up to P2P by default (GH#10008) James Bourbeau

Maintenance¶

Add minimum version to optional jinja2 dependency (GH#9999) Charles Blackmon-Luca

2023.2.1¶

Released on February 24, 2023

Note

This release changes the default DataFrame shuffle algorithm to p2p to improve stability and performance. Learn more here and please provide any feedback on this discussion.

If you encounter issues with this new algorithm, please see the documentation for more information and for how to switch back to the old mode.

Enhancements¶

Enable P2P shuffling by default (GH#9991) Florian Jetter

P2P rechunking (GH#9939) Hendrik Makait

Efficient dataframe.convert-string support for read_parquet (GH#9979) Irina Truong

Allow p2p shuffle kwarg for DataFrame merges (GH#9900) Florian Jetter

Change split_row_groups default to “infer” (GH#9637) Richard (Rick) Zamora

Add option for converting string data to use pyarrow strings (GH#9926) James Bourbeau

Add support for multi-column sort_values (GH#8263) Charles Blackmon-Luca

Generator based random-number generation in dask.array (GH#9038) Eray Aslan

Support numeric_only for simple groupby aggregations for pandas 2.0 compatibility (GH#9889) Irina Truong

Bug Fixes¶

Fix profilers plot not being aligned to context manager enter time (GH#9739) David Hoese

Relax dask.dataframe assert_eq type checks (GH#9989) Matthew Rocklin

Restore describe compatibility for pandas 2.0 (GH#9982) James Bourbeau

Documentation¶

Improving deploying Dask docs (GH#9912) Sarah Charlotte Johnson

More docs for DataFrame.partitions (GH#9976) Tom Augspurger

Update docs with more information on default Delayed scheduler (GH#9903) Guillaume Eynard-Bontemps

Deployment Considerations documentation (GH#9933) Gabe Joseph

Maintenance¶

Temporarily rerun flaky tests (GH#9983) James Bourbeau

Update parsing of FULL_RAPIDS_VER/FULL_UCX_PY_VER (GH#9990) Charles Blackmon-Luca

Increase minimum supported versions to pandas=1.3 and numpy=1.21 (GH#9950) James Bourbeau

Fix std to work with numeric_only for pandas 2.0 (GH#9960) Irina Truong

Temporarily xfail test_roundtrip_partitioned_pyarrow_dataset (GH#9977) James Bourbeau

Fix copy on write failure in test_idxmaxmin (GH#9944) Patrick Hoefler

Bump pre-commit versions (GH#9955) crusaderky

Fix test_groupby_unaligned_index for pandas 2.0 (GH#9963) Irina Truong

Un-xfail test_set_index_overlap_2 for pandas 2.0 (GH#9959) James Bourbeau

Fix test_merge_by_index_patterns for pandas 2.0 (GH#9930) Irina Truong

Bump jacobtomlinson/gha-find-replace from 2 to 3 (GH#9953) James Bourbeau

Fix test_rolling_agg_aggregate for pandas 2.0 compatibility (GH#9948) Irina Truong

Bump black to 23.1.0 (GH#9956) crusaderky

Run GPU tests on python 3.8 & 3.10 (GH#9940) Charles Blackmon-Luca

Fix test_to_timestamp for pandas 2.0 (GH#9932) Irina Truong

Fix an error with groupby value_counts for pandas 2.0 compatibility (GH#9928) Irina Truong

Config converter: replace all dashes with underscores (GH#9945) Jacob Tomlinson

CI: use nightly wheel to install pyarrow in upstream test build (GH#9873) Joris Van den Bossche

2023.2.0¶

Released on February 10, 2023

Enhancements¶

Update numeric_only default in quantile for pandas 2.0 (GH#9854) Irina Truong

Make repartition a no-op when divisions match (GH#9924) James Bourbeau

Update datetime_is_numeric behavior in describe for pandas 2.0 (GH#9868) Irina Truong

Update value_counts to return correct name in pandas 2.0 (GH#9919) Irina Truong

Support new axis=None behavior in pandas 2.0 for certain reductions (GH#9867) James Bourbeau

Filter out all-nan RuntimeWarning at the chunk level for nanmin and nanmax (GH#9916) Julia Signell

Fix numeric meta_nonempty index creation for pandas 2.0 (GH#9908) James Bourbeau

Fix DataFrame.info() tests for pandas 2.0 (GH#9909) James Bourbeau

Bug Fixes¶

Fix GroupBy.value_counts handling for multiple groupby columns (GH#9905) Charles Blackmon-Luca

Documentation¶

Fix some outdated information/typos in development guide (GH#9893) Patrick Hoefler

Add note about keep=False in drop_duplicates docstring (GH#9887) Jayesh Manani

Add meta details to dask Array (GH#9886) Jayesh Manani

Clarify task stream showing more rows than threads (GH#9906) Gabe Joseph

Maintenance¶

Fix test_numeric_column_names for pandas 2.0 (GH#9937) Irina Truong

Fix dask/dataframe/tests/test_utils_dataframe.py tests for pandas 2.0 (GH#9788) James Bourbeau

Replace index.is_numeric with is_any_real_numeric_dtype for pandas 2.0 compatibility (GH#9918) Irina Truong

Avoid pd.core import in dask utils (GH#9907) Matthew Roeschke

Use label for upstream build on pull requests (GH#9910) James Bourbeau

Broaden exception catching for sqlalchemy.exc.RemovedIn20Warning (GH#9904) James Bourbeau

Temporarily restrict sqlalchemy < 2 in CI (GH#9897) James Bourbeau

Update isort version to 5.12.0 (GH#9895) Lawrence Mitchell

Remove unused skiprows variable in read_csv (GH#9892) Patrick Hoefler

2023.1.1¶

Released on January 27, 2023

Enhancements¶

Add to_backend method to Array and _Frame (GH#9758) Richard (Rick) Zamora

Small fix for timestamp index divisions in pandas 2.0 (GH#9872) Irina Truong

Add numeric_only to DataFrame.cov and DataFrame.corr (GH#9787) James Bourbeau

Fixes related to group_keys default change in pandas 2.0 (GH#9855) Irina Truong

infer_datetime_format compatibility for pandas 2.0 (GH#9783) James Bourbeau

Bug Fixes¶

Fix serialization bug in BroadcastJoinLayer (GH#9871) Richard (Rick) Zamora

Satisfy broadcast argument in DataFrame.merge (GH#9852) Richard (Rick) Zamora

Fix pyarrow parquet columns statistics computation (GH#9772) aywandji

Documentation¶

Fix “duplicate explicit target name” docs warning (GH#9863) Chiara Marmo

Fix code formatting issue in “Defining a new collection backend” docs (GH#9864) Chiara Marmo

Update dashboard documentation for memory plot (GH#9768) Jayesh Manani

Add docs section about no-worker tasks (GH#9839) Florian Jetter

Maintenance¶

Additional updates for detecting a distributed scheduler (GH#9890) James Bourbeau

Update gpuCI RAPIDS_VER to 23.04 (GH#9876)

Reverse precedence between collection and distributed default (GH#9869) Florian Jetter

Update xarray-contrib/issue-from-pytest-log to version 1.2.6 (GH#9865) James Bourbeau

Don’t require dask config shuffle default (GH#9826) Florian Jetter

Un-xfail datetime64 Parquet roundtripping tests for new fastparquet (GH#9811) James Bourbeau

Add option to manually run upstream CI build (GH#9853) James Bourbeau

Use custom timeout in CI builds (GH#9844) James Bourbeau

Remove kwargs from make_blockwise_graph (GH#9838) Florian Jetter

Ignore warnings on persist call in test_setitem_extended_API_2d_mask (GH#9843) Charles Blackmon-Luca

Fix running S3 tests locally (GH#9833) James Bourbeau

2023.1.0¶

Released on January 13, 2023

Enhancements¶

Use distributed default clients even if no config is set (GH#9808) Florian Jetter

Implement ma.where and ma.nonzero (GH#9760) Erik Holmgren

Update zarr store creation functions (GH#9790) Ryan Abernathey

iteritems compatibility for pandas 2.0 (GH#9785) James Bourbeau

Accurate sizeof for pandas string[python] dtype (GH#9781) crusaderky

Deflate sizeof() of duplicate references to pandas object types (GH#9776) crusaderky

GroupBy.__getitem__ compatibility for pandas 2.0 (GH#9779) James Bourbeau

append compatibility for pandas 2.0 (GH#9750) James Bourbeau

get_dummies compatibility for pandas 2.0 (GH#9752) James Bourbeau

is_monotonic compatibility for pandas 2.0 (GH#9751) James Bourbeau

numpy=1.24 compatibility (GH#9777) James Bourbeau

Documentation¶

Remove duplicated encoding kwarg in docstring for to_json (GH#9796) Sultan Orazbayev

Mention SubprocessCluster in LocalCluster documentation (GH#9784) Hendrik Makait

Move Prometheus docs to dask/distributed (GH#9761) crusaderky

Maintenance¶

Temporarily ignore RuntimeWarning in test_setitem_extended_API_2d_mask (GH#9828) James Bourbeau

Fix flaky test_threaded.py::test_interrupt (GH#9827) Hendrik Makait

Update xarray-contrib/issue-from-pytest-log in upstream report (GH#9822) James Bourbeau

pip install dask on gpuCI builds (GH#9816) Charles Blackmon-Luca

Bump actions/checkout from 3.2.0 to 3.3.0 (GH#9815)

Resolve sqlalchemy import failures in mindeps testing (GH#9809) Charles Blackmon-Luca

Ignore sqlalchemy.exc.RemovedIn20Warning (GH#9801) Thomas Grainger

xfail datetime64 Parquet roundtripping tests for pandas 2.0 (GH#9786) James Bourbeau

Reduce size of expected DoK sparse matrix (GH#9775) Elliott Sales de Andrade

Remove executable flag from dask/dataframe/io/orc/utils.py (GH#9774) Elliott Sales de Andrade

2022.12.1¶

Released on December 16, 2022

Enhancements¶

Support dtype_backend="pandas|pyarrow" configuration (GH#9719) James Bourbeau

Support cupy.ndarray to cudf.DataFrame dispatching in dask.dataframe (GH#9579) Richard (Rick) Zamora

Make filesystem-backend configurable in read_parquet (GH#9699) Richard (Rick) Zamora

Serialize all pyarrow extension arrays efficiently (GH#9740) James Bourbeau

Bug Fixes¶

Fix bug when repartitioning with tz-aware datetime index (GH#9741) James Bourbeau

Partial functions in aggs may have arguments (GH#9724) Irina Truong

Add support for simple operation with pyarrow-backed extension dtypes (GH#9717) James Bourbeau

Rename columns correctly in case of SeriesGroupby (GH#9716) Lawrence Mitchell

Documentation¶

Update Prometheus docs (GH#9696) Hendrik Makait

Maintenance¶

Add zarr to Python 3.11 CI environment (GH#9771) James Bourbeau

Add support for Python 3.11 (GH#9708) Thomas Grainger

Bump actions/checkout from 3.1.0 to 3.2.0 (GH#9753)

Avoid np.bool8 deprecation warning (GH#9737) James Bourbeau

Make sure dev packages aren’t overwritten in upstream CI build (GH#9731) James Bourbeau

Avoid adding data.h5 and mydask.html files during tests (GH#9726) Thomas Grainger

2022.12.0¶

Released on December 2, 2022

Enhancements¶

Remove statistics-based set_index logic from read_parquet (GH#9661) Richard (Rick) Zamora

Add support for use_nullable_dtypes to dd.read_parquet (GH#9617) Ian Rose

Fix map_overlap in order to accept pandas arguments (GH#9571) Fabien Aulaire

Fix pandas 1.5+ FutureWarning in .str.split(..., expand=True) (GH#9704) Jacob Hayes

Enable column projection for groupby slicing (GH#9667) Richard (Rick) Zamora

Improve error message for failed backend dispatch call (GH#9677) Richard (Rick) Zamora

Bug Fixes¶

Revise meta creation in arrow parquet engine (GH#9672) Richard (Rick) Zamora

Fix