Dashboard Diagnostics


Profiling parallel code can be challenging, but the interactive dashboard provided with Dask’s distributed scheduler makes it easier by providing live monitoring of your Dask computations. The dashboard is built with Bokeh and starts automatically whenever the scheduler is created, returning a link to the dashboard.

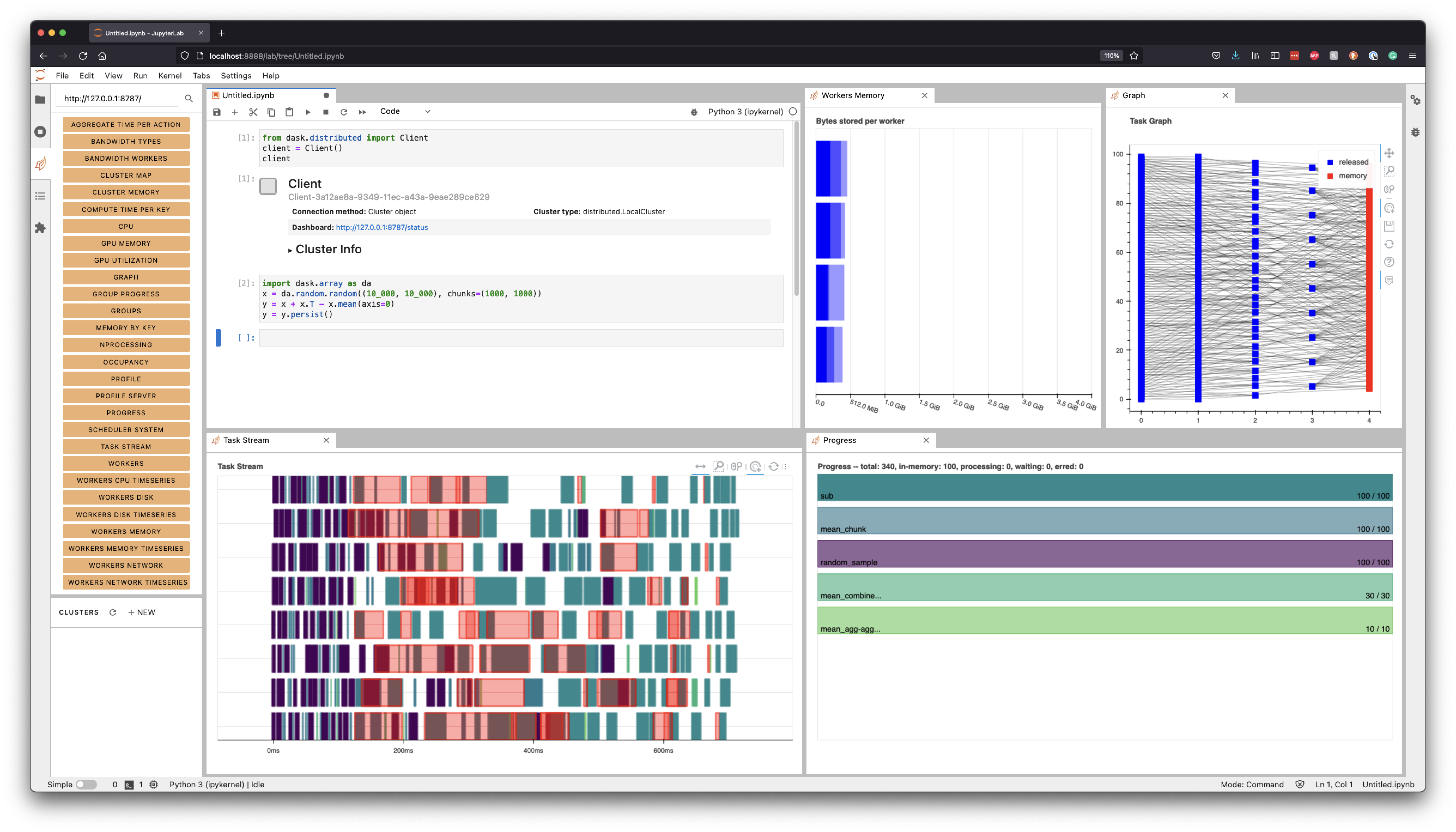

Locally, this happens when you create a Client and connect it to the scheduler:

```python
from dask.distributed import Client

client = Client()  # start a distributed scheduler and workers locally
```

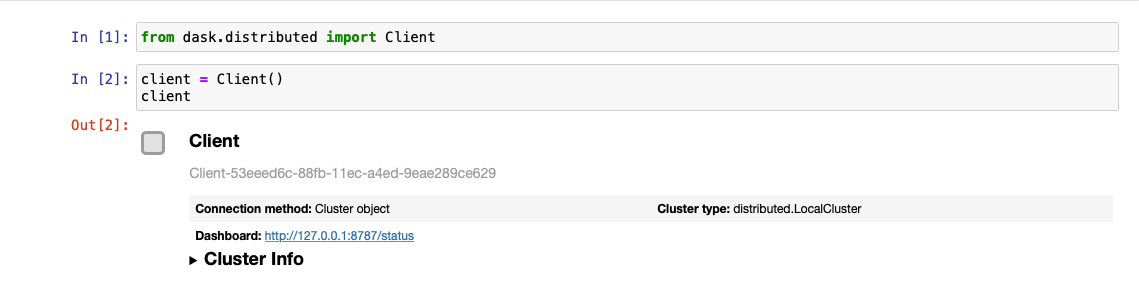

In a Jupyter Notebook or JupyterLab session, displaying the client object will show the dashboard address:

You can also query the address from client.dashboard_link (or, for older versions of distributed, client.scheduler_info()['services']).

By default, when you start a scheduler on your local machine, the dashboard is served at http://localhost:8787/status. You can type this address into your browser to access the dashboard, but the dashboard may be served on a different port if 8787 is already taken. You can also configure the address using the dashboard_address parameter (see LocalCluster).
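The following is a minimal sketch. It assumes `distributed` is installed, and Bokeh as well if you want the dashboard itself. The snippet starts a small local cluster with the dashboard on port 8790 instead of 8787, then prints the resulting link. The port number is an arbitrary choice for illustration. `processes=False` (workers as threads in the current process) is only there to keep the example lightweight.

```python
from dask.distributed import Client, LocalCluster

# Ask for the dashboard on port 8790 (an arbitrary example port).
# processes=False keeps the worker as threads in this process, so no
# subprocesses are spawned; it is not required for dashboard_address.
with LocalCluster(
    n_workers=1,
    threads_per_worker=2,
    processes=False,
    dashboard_address=":8790",
) as cluster, Client(cluster) as client:
    # e.g. http://127.0.0.1:8790/status; may be empty if Bokeh is missing
    # and the dashboard therefore was not started.
    link = client.dashboard_link
    print(link)
```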

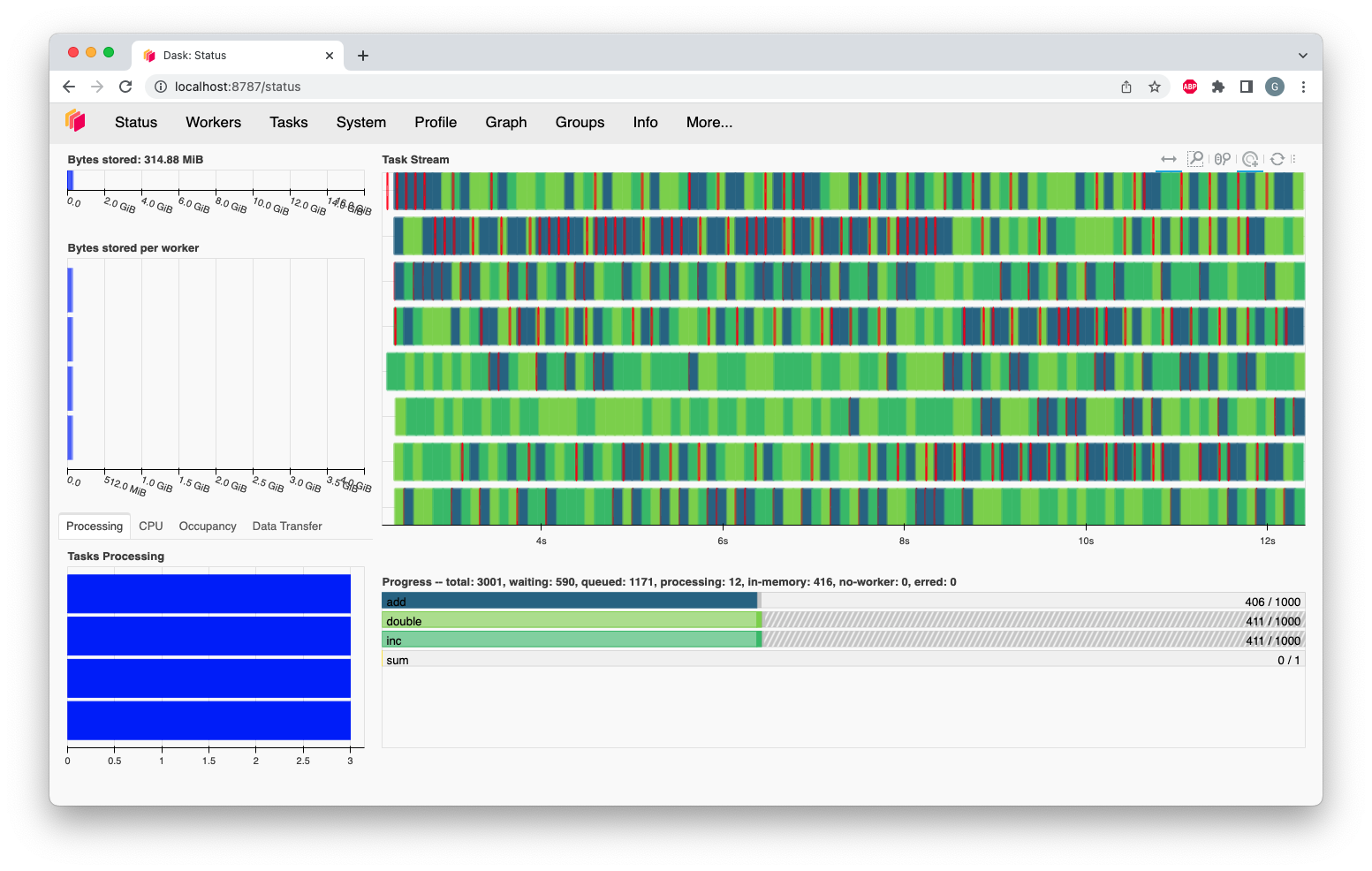

There are numerous diagnostic plots available. In this guide you’ll learn about some of the most commonly used plots shown on the dashboard’s landing page:

- Bytes Stored and Bytes per Worker: cluster memory and memory per worker.

- Task Processing/CPU Utilization/Occupancy/Data Transfer: tasks being processed by each worker, CPU utilization per worker, expected runtime for all tasks currently on a worker, and open data transfers per worker.

- Task Stream: individual tasks across threads.

- Progress: progress of a set of tasks.

Bytes Stored and Bytes per Worker

These two plots show a summary of the overall memory usage on the cluster (Bytes Stored), as well as the individual usage on each worker (Bytes per Worker). The colors on these plots indicate the following.

- ■ Memory under target (default 60% of memory available)

- ■ Memory is close to the spilling to disk target (default 70% of memory available)

- ■ When the worker (or at least one worker) is paused (default 80% of memory available) or retiring

- ■ Memory spilled to disk

The different levels of transparency on these plots relate to the type of memory (Managed, Unmanaged, and Unmanaged recent). You can find a detailed explanation of them in the Worker Memory management documentation.
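The hypothetical helper below shows what the default percentages mean for a concrete worker by converting them into byte counts. It assumes the defaults listed above. In Dask, these fractions are controlled by the `distributed.worker.memory.target`, `distributed.worker.memory.spill`, and `distributed.worker.memory.pause` configuration keys. The helper and constant names are illustrative only, not Dask APIs.

```python
from collections.abc import Mapping

GiB = 2**30

# Default fractions from the list above (hypothetical constant name).
DEFAULT_FRACTIONS = {
    "target": 0.60,  # distributed.worker.memory.target
    "spill": 0.70,   # distributed.worker.memory.spill
    "pause": 0.80,   # distributed.worker.memory.pause
}


def memory_thresholds(
    memory_limit: int, fractions: Mapping[str, float] = DEFAULT_FRACTIONS
) -> dict[str, int]:
    """Byte count at which a worker with `memory_limit` bytes reaches each threshold."""
    return {name: round(memory_limit * frac) for name, frac in fractions.items()}


# A worker with an 8 GiB memory limit: roughly 4.8, 5.6 and 6.4 GiB.
for name, nbytes in memory_thresholds(8 * GiB).items():
    print(f"{name}: {nbytes / GiB:.1f} GiB")
```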

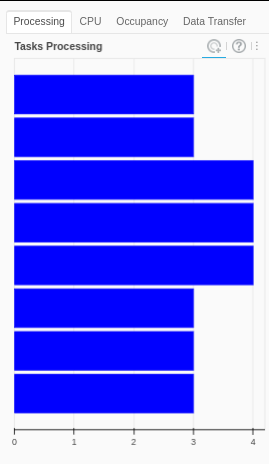

Task Processing/CPU Utilization/Occupancy/Data Transfer

Task Processing

The Processing tab in the figure shows the number of tasks that have been assigned to each worker. Not all of these tasks are necessarily executing at the moment: a worker only executes as many tasks at once as it has threads. Any extra tasks assigned to the worker will wait to run, depending on their priority and whether their dependencies are in memory on the worker.
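A hypothetical helper (not a Dask API) can sketch the relationship between assigned and executing tasks. It assumes every assigned task already has its dependencies in memory. The "running" number is therefore an upper bound; in practice, some tasks may also be blocked waiting for data.

```python
def split_assigned_tasks(assigned: int, nthreads: int) -> tuple[int, int]:
    """Hypothetical model: (tasks that can run now, tasks left waiting).

    Assumes every assigned task already has its dependencies in memory,
    so the first number is an upper bound on what actually executes.
    """
    if nthreads < 1:
        raise ValueError("a worker needs at least one thread")
    if assigned < 0:
        raise ValueError("assigned cannot be negative")
    running = min(assigned, nthreads)
    return running, assigned - running


# A 4-thread worker with 10 tasks assigned: at most 4 run, at least 6 wait.
print(split_assigned_tasks(10, 4))  # (4, 6)
```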

The scheduler will try to ensure that the workers are processing about the same number of tasks. If one of the bars is completely white, that worker has no tasks and is waiting for them. This usually happens when the computation is close to finishing (nothing to worry about), but it can also mean that tasks are not distributed optimally across workers.

There are three different colors that can appear in this plot:

- ■ Processing tasks.

- ■ Saturated: it has enough work to stay busy.

- ■ Idle: does not have enough work to stay busy.

This plot on the dashboard has three extra tabs with the following information:

CPU Utilization

The CPU tab shows the CPU usage per worker, as reported by psutil metrics.

Occupancy

The Occupancy tab shows the occupancy, in time, per worker. The total occupancy for a worker is the amount of time Dask expects it would take to run all the tasks, and transfer any of their dependencies from other workers, if the execution and transfers happened one-by-one. For example, if a worker has an occupancy of 10s, and it has 2 threads, you can expect it to take about 5s of wall-clock time for the worker to complete all its tasks.
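The occupancy example above can be written out as a back-of-the-envelope calculation. The function names are hypothetical, and this is not how the scheduler computes occupancy internally. The sketch assumes the work divides evenly across threads.

```python
def occupancy(task_seconds: list[float], transfer_seconds: list[float]) -> float:
    """Expected time if every task and transfer ran one after another."""
    return sum(task_seconds) + sum(transfer_seconds)


def expected_wall_clock(occupancy_s: float, nthreads: int) -> float:
    """Rough estimate assuming the work spreads evenly over all threads."""
    if nthreads < 1:
        raise ValueError("a worker needs at least one thread")
    return occupancy_s / nthreads


# Three tasks plus one dependency transfer: 3.0 + 3.0 + 2.5 + 1.5 = 10.0 s.
occ = occupancy([3.0, 3.0, 2.5], [1.5])
print(occ, expected_wall_clock(occ, nthreads=2))  # 10.0 5.0
```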

Data Transfer

The Data Transfer tab shows the size of open data transfers from/to other workers, per worker.

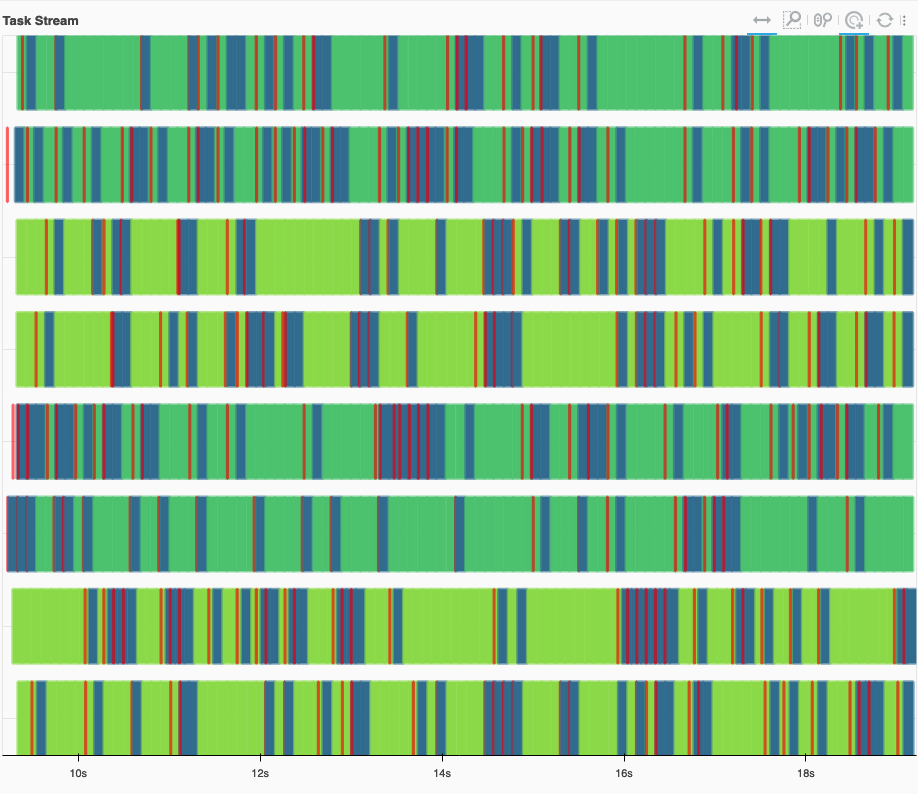

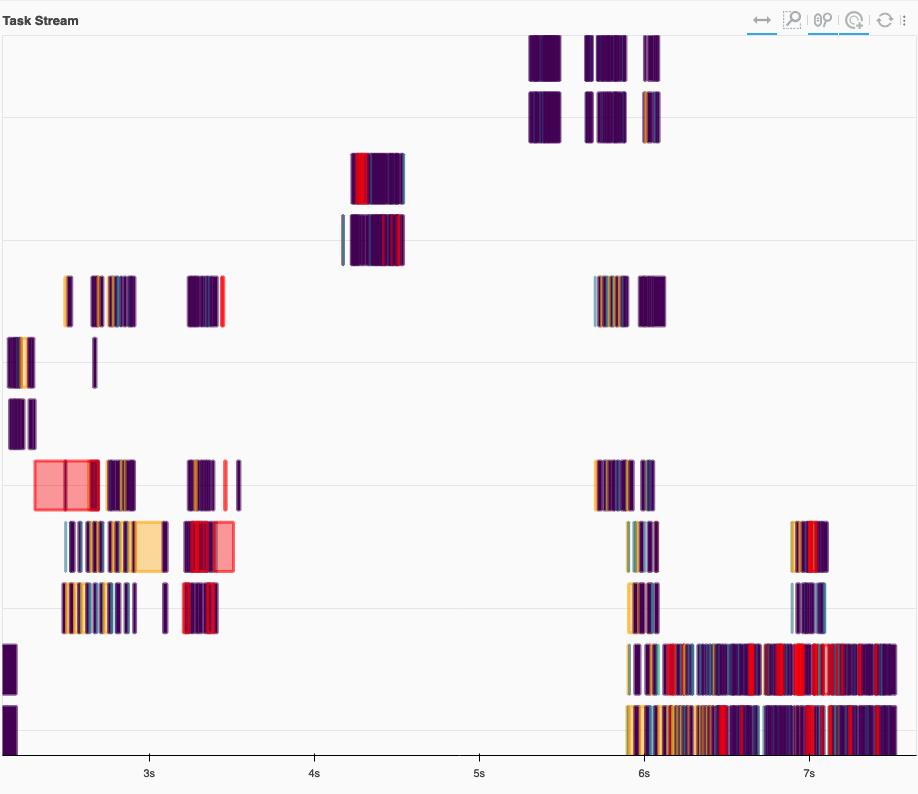

Task Stream

The task stream is a view of which tasks have been running on each thread of each worker. Each row represents a thread, and each rectangle represents an individual task. The color of each rectangle corresponds to the task-prefix of the task being performed and matches the color of the Progress plot. This means that all the individual tasks that are part of the inc task-prefix, for example, will have the same color (which is chosen randomly from the viridis color map).
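`dask.distributed.get_task_stream` gives you the same information programmatically: it records the task stream for computations run inside a `with` block. The sketch below assumes `distributed` is installed. `inc` is a throwaway function chosen to echo the inc task-prefix mentioned above, and the cluster size is arbitrary.

```python
from dask.distributed import Client, get_task_stream


def inc(x):
    return x + 1


with Client(processes=False, n_workers=1, threads_per_worker=2) as client:
    # Record every task that finishes while this block is active.
    with get_task_stream() as ts:
        futures = client.map(inc, range(10))  # keys look like "inc-<token>"
        total = sum(client.gather(futures))

# One record per task; "startstops" holds the timed intervals (compute,
# transfer, ...) that the dashboard draws as rectangles.
actions = {span["action"] for record in ts.data for span in record["startstops"]}
print(total, len(ts.data), sorted(actions))
```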

Note that when a new worker joins, it will get a new row, even if it’s replacing a worker that recently left. So it’s possible to temporarily see more rows on the task stream than there are currently threads in the cluster, because both the history of the old worker and the new worker will be displayed.

Certain colors are reserved for specific kinds of operations:

- ■ Transferring data between workers (red).

- ■ Reading from or writing to disk (orange).

- ■ Serializing/deserializing data.

- ■ Erred tasks.

In some scenarios, the task stream will show white space between rectangles. During that time, the worker thread was idle. Too much white space indicates sub-optimal use of resources. Additionally, many long red bars (transfers) can indicate a performance problem. The cause can be anything from chunk sizes that are too large, to a graph that is too complex, to poor scheduling choices.
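One way to put a number on "too much white space" is to measure, for a single thread, what fraction of an observation window is covered by work. The helper below is a hypothetical sketch, not part of Dask. It assumes you already have the (start, stop) times of the work that ran on that thread. It merges overlapping intervals so no time is counted twice.

```python
def busy_fraction(intervals, window_start, window_stop):
    """Fraction of [window_start, window_stop) covered by at least one interval."""
    length = window_stop - window_start
    if length <= 0:
        raise ValueError("window must have positive length")
    # Clip each (start, stop) pair to the window and drop empty ones.
    clipped = sorted(
        (max(start, window_start), min(stop, window_stop))
        for start, stop in intervals
        if min(stop, window_stop) > max(start, window_start)
    )
    busy = 0.0
    run_start = run_stop = None
    for start, stop in clipped:
        if run_stop is None or start > run_stop:
            # A gap (white space): close the previous merged run.
            if run_stop is not None:
                busy += run_stop - run_start
            run_start, run_stop = start, stop
        else:
            # Overlapping or touching interval: extend the current run.
            run_stop = max(run_stop, stop)
    if run_stop is not None:
        busy += run_stop - run_start
    return busy / length


# One thread over a 10 s window, busy from 0-2 s and 5-6 s.
print(busy_fraction([(0, 2), (5, 6)], 0, 10))  # 0.3, i.e. idle 70% of the time
```

A value well below 1.0 corresponds to a row with a lot of white space.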

An example of a healthy Task Stream, with little to no white space. Transfers (red) are quick, and overlap with computation.

An example of an unhealthy Task Stream, with a lot of white space. Workers were idle most of the time. Additionally, there are some long transfers (red) which don’t overlap with computation. We also see spilling to disk (orange).

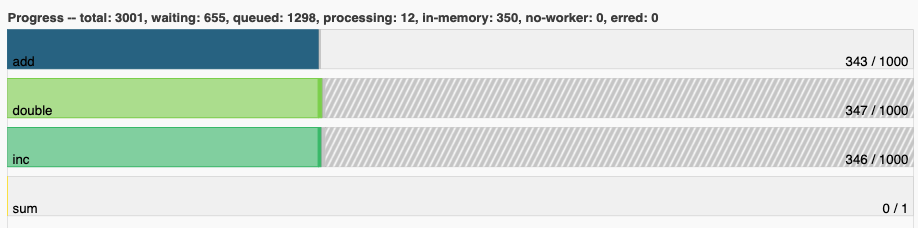

Progress

The progress bars plot shows the progress of each individual task-prefix. The color of each bar matches the color of the individual tasks on the task stream from the same task-prefix. Each horizontal bar has five different components, from left to right:

- Tasks that have completed, are not needed anymore, and now have been released from memory.

- Tasks that have completed and are in memory.

- Tasks that are ready to run.

- Tasks that are queued. They are ready to run, but not assigned to workers yet, so higher-priority tasks can run first.

- Tasks that do not have a worker to run on due to restrictions or limited resources.
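Each bar can be modeled as proportional shares of a task-prefix's tasks. This is a hypothetical sketch. The segment names are made up for illustration, and the model assumes each segment's length is proportional to its task count. Tasks in any other state (for example, still waiting on dependencies) are reported as `remaining`.

```python
from collections.abc import Mapping

# Left-to-right order of the five segments described above.
# These state names are hypothetical labels, not Dask identifiers.
SEGMENTS = ("released", "in_memory", "ready", "queued", "no_worker")


def progress_segments(counts: Mapping[str, int], total: int) -> dict[str, float]:
    """Return the share of one task-prefix's bar taken by each segment.

    Tasks in any other state (for example, still waiting on dependencies)
    are grouped under "remaining".
    """
    if total <= 0:
        raise ValueError("total must be positive")
    shown = sum(counts.get(name, 0) for name in SEGMENTS)
    if shown > total:
        raise ValueError("segment counts exceed the total number of tasks")
    shares = {name: counts.get(name, 0) / total for name in SEGMENTS}
    shares["remaining"] = (total - shown) / total
    return shares


# 100 tasks in one task-prefix, 10 of them still waiting on dependencies.
shares = progress_segments(
    {"released": 40, "in_memory": 10, "ready": 20, "queued": 15, "no_worker": 5},
    total=100,
)
print(shares)
```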

Dask JupyterLab Extension

The JupyterLab Dask extension allows you to embed Dask’s dashboard plots directly into JupyterLab panes.

Once the JupyterLab Dask extension is installed, you can choose any of the available plots and integrate it as a pane in your JupyterLab session. For example, in the figure below we selected the Task Stream, Progress, Workers Memory, and Graph plots.